This document explains how to use Google Kubernetes Engine (GKE) to deploy a distributed load testing framework that uses multiple containers to create traffic for a simple REST-based API. This document load-tests a web application deployed to App Engine that exposes REST-style endpoints to respond to incoming HTTP POST requests.

You can use this same pattern to create load testing frameworks for a variety of scenarios and applications, such as messaging systems, data stream management systems, and database systems.

Objectives

- Define environment variables to control deployment configuration.

- Create a GKE cluster.

- Perform load testing.

- Optionally scale up the number of users or extend the pattern to other use cases.

Costs

In this document, you use the following billable components of Google Cloud:

- App Engine

- Artifact Registry

- Cloud Build

- Cloud Storage

- Google Kubernetes Engine

To generate a cost estimate based on your projected usage, use the pricing calculator.

Before you begin

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Google Cloud project.

- Enable the App Engine, Artifact Registry, Cloud Build, Compute Engine, Resource Manager, Google Kubernetes Engine, and Identity and Access Management APIs.


- Grant roles to your user account. Run the following command once for each of the following IAM roles:

  roles/serviceusage.serviceUsageAdmin, roles/container.admin, roles/appengine.appAdmin, roles/appengine.appCreator, roles/artifactregistry.admin, roles/resourcemanager.projectIamAdmin, roles/compute.instanceAdmin.v1, roles/iam.serviceAccountUser, roles/cloudbuild.builds.builder, roles/iam.serviceAccountAdmin

  gcloud projects add-iam-policy-binding PROJECT_ID --member="user:USER_IDENTIFIER" --role=ROLE

  Replace the following:

  - PROJECT_ID: your project ID.
  - USER_IDENTIFIER: the identifier for your user account. For example, myemail@example.com.
  - ROLE: each individual role.
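Instead of running the command by hand for each role, you can loop over the list. The following is a minimal sketch: the function name grant_roles and the DRY_RUN switch are conveniences added here, not part of the original instructions. With the default DRY_RUN=1 the function only prints the gcloud commands so that you can review them; set DRY_RUN=0 to run them.

```shell
#!/usr/bin/env bash
# Sketch: bind each IAM role listed above to one user account.
# Usage: grant_roles PROJECT_ID USER_IDENTIFIER
# DRY_RUN=1 (default) prints the gcloud commands; DRY_RUN=0 executes them.

ROLES=(
  roles/serviceusage.serviceUsageAdmin
  roles/container.admin
  roles/appengine.appAdmin
  roles/appengine.appCreator
  roles/artifactregistry.admin
  roles/resourcemanager.projectIamAdmin
  roles/compute.instanceAdmin.v1
  roles/iam.serviceAccountUser
  roles/cloudbuild.builds.builder
  roles/iam.serviceAccountAdmin
)

grant_roles() {
  local project_id="$1" user_identifier="$2" role
  local -a cmd
  for role in "${ROLES[@]}"; do
    cmd=(gcloud projects add-iam-policy-binding "${project_id}"
         "--member=user:${user_identifier}" "--role=${role}")
    if [[ "${DRY_RUN:-1}" == "1" ]]; then
      echo "${cmd[*]}"   # review mode: show the command only
    else
      "${cmd[@]}"        # run the command
    fi
  done
}
```

For example, grant_roles my-project myemail@example.com prints one command per role; DRY_RUN=0 grant_roles my-project myemail@example.com runs them.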

When you finish the tasks that are described in this document, you can avoid continued billing by deleting the resources that you created. For more information, see Clean up.

Example workload

The following diagram shows an example workload where requests go from client to application.

To model this interaction, you can use Locust, a distributed, Python-based load testing tool that can distribute requests across multiple target paths. For example, Locust can distribute requests to the /login and /metrics target paths. The workload is modeled as a set of tasks in Locust.

Architecture

This architecture involves two main components:

- The Locust Docker container image.

- The container orchestration and management mechanism.

The Locust Docker container image contains the Locust software. The Dockerfile, which you get when you clone the GitHub repository that accompanies this document, uses a base Python image and includes scripts to start the Locust service and execute the tasks. To approximate real-world clients, each Locust task is weighted. For example, registration happens once per thousand total client requests.
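The weighting works as a probability: each time a simulated user picks its next task, Locust chooses among the tasks at random in proportion to their weights. With the weights that the sample image uses for its two endpoints (1 for /login and 999 for /metrics, as described in the build step later in this document), the expected share of each request type works out as follows:

```latex
P(\text{/login}) = \frac{w_{\text{login}}}{w_{\text{login}} + w_{\text{metrics}}} = \frac{1}{1 + 999} = \frac{1}{1000},
\qquad
P(\text{/metrics}) = \frac{999}{1000}
```

So out of 100,000 requests, roughly 100 go to /login and 99,900 go to /metrics.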

GKE provides container orchestration and management. With GKE, you can specify the number of container nodes that provide the foundation for your load testing framework. You can also organize your load testing workers into Pods, and specify how many Pods you want GKE to keep running.

To deploy the load testing tasks, you do the following:

- Deploy a load testing primary, which is referred to as a master by Locust.

- Deploy a group of load testing workers. With these load testing workers, you can create a substantial amount of traffic for testing purposes.

The following diagram shows the architecture that demonstrates load testing using a sample application. The master Pod serves the web interface used to operate and monitor load testing. The worker Pods generate the REST request traffic for the application undergoing test, and send metrics to the master.

About the load testing master

The Locust master is the entry point for executing the load testing tasks. The Locust master configuration specifies several elements, including the default ports used by the container:

- 8089 for the web interface
- 5557 and 5558 for communicating with workers

This information is later used to configure the Locust workers.

You deploy a Service to ensure that the necessary ports are accessible to other Pods within the cluster through hostname:port. These ports are also referenceable through a descriptive port name.

This Service allows the Locust workers to easily discover and reliably communicate with the master, even if the master fails and is replaced with a new Pod by the Deployment.
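For orientation, a Service of this kind might look like the following. This is a minimal sketch, not the repository's template: the selector label app: locust-master and the port names are assumptions, and the authoritative definition is kubernetes-config/locust-master-service.yaml.tpl in the cloned repository. The function only prints the manifest.

```shell
#!/usr/bin/env bash
# Sketch of a ClusterIP Service exposing the Locust master's worker ports.
# Assumptions (not taken from the repository): the selector label
# app=locust-master and the port names master-p1 / master-p2.
master_service_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: locust-master
spec:
  type: ClusterIP
  selector:
    app: locust-master
  ports:
    - name: master-p1
      port: 5557
      targetPort: 5557
      protocol: TCP
    - name: master-p2
      port: 5558
      targetPort: 5558
      protocol: TCP
EOF
}
```

With a Service like this in the same namespace, workers can reach the master at locust-master:5557 through cluster DNS, regardless of which Pod currently backs it.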

A second Service is deployed with the necessary annotation to create an internal passthrough Network Load Balancer that makes the Locust web application Service accessible to clients outside of your cluster that use the same VPC network and are located in the same Google Cloud region as your cluster.
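On GKE, the annotation that requests an internal passthrough Network Load Balancer for a Service of type LoadBalancer is networking.gke.io/load-balancer-type: "Internal". The following sketch prints such a Service for the web port. As with the previous sketch, the selector label and port name are assumptions, and the repository template remains the reference.

```shell
#!/usr/bin/env bash
# Sketch of a LoadBalancer Service for the Locust web interface (port 8089).
# The annotation asks GKE for an internal passthrough Network Load Balancer
# instead of an external one. Selector label and port name are assumptions.
master_web_service_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: locust-master-web
  annotations:
    networking.gke.io/load-balancer-type: "Internal"
spec:
  type: LoadBalancer
  selector:
    app: locust-master
  ports:
    - name: loc-master-web
      port: 8089
      targetPort: 8089
      protocol: TCP
EOF
}
```

Without the annotation, GKE would provision an external load balancer and expose the Locust web interface outside your VPC network.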

After you deploy the Locust master, you can open the web interface using the internal IP address provisioned by the internal passthrough Network Load Balancer. After you deploy the Locust workers, you can start the simulation and look at aggregate statistics through the Locust web interface.

About the load testing workers

The Locust workers execute the load testing tasks. You use a single Deployment to create multiple Pods. The Pods are spread out across the Kubernetes cluster. Each Pod uses environment variables to control configuration information, such as the hostname of the system under test and the hostname of the Locust master.
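Because the master and the workers run the same image, a startup script can use environment variables to decide which role a container takes. The following is a hypothetical sketch of that idea: the variable names LOCUST_MODE, LOCUST_MASTER_HOST, TARGET_HOST, and LOCUSTFILE and the default locustfile path are illustrative assumptions, not the names used by the repository's scripts. The -f, --host, --master, --worker, and --master-host options are standard Locust command-line options. The function prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical entrypoint sketch: build the Locust command line from
# environment variables. All variable names and the locustfile path are
# illustrative assumptions. A real entrypoint would exec the command.
locust_command() {
  local locustfile="${LOCUSTFILE:-/locust-tasks/tasks.py}"
  if [[ -z "${TARGET_HOST:-}" ]]; then
    echo "TARGET_HOST must be set" >&2
    return 1
  fi
  local -a args=(locust -f "${locustfile}" "--host=${TARGET_HOST}")
  case "${LOCUST_MODE:-standalone}" in
    master)
      args+=(--master) ;;
    worker)
      if [[ -z "${LOCUST_MASTER_HOST:-}" ]]; then
        echo "LOCUST_MASTER_HOST must be set in worker mode" >&2
        return 1
      fi
      args+=(--worker "--master-host=${LOCUST_MASTER_HOST}") ;;
    standalone)
      ;;
    *)
      echo "unknown LOCUST_MODE: ${LOCUST_MODE}" >&2
      return 1 ;;
  esac
  echo "${args[*]}"
}
```

In a worker Pod, LOCUST_MASTER_HOST would typically be the master Service name, so that workers keep finding the master after it is rescheduled.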

The following diagram shows the relationship between the Locust master and the Locust workers.

Initialize common variables

You must define several variables that control where elements of the infrastructure are deployed.

Open Cloud Shell.

You run all the terminal commands in this document from Cloud Shell.

Set the environment variables that require customization:

export GKE_CLUSTER=GKE_CLUSTER
export AR_REPO=AR_REPO
export REGION=REGION
export ZONE=ZONE
export SAMPLE_APP_LOCATION=SAMPLE_APP_LOCATION

Replace the following:

- GKE_CLUSTER: the name of your GKE cluster.
- AR_REPO: the name of your Artifact Registry repository.
- REGION: the region where your GKE cluster and Artifact Registry repository will be created.
- ZONE: the zone in your region where your Compute Engine instance will be created.
- SAMPLE_APP_LOCATION: the (regional) location where your sample App Engine application will be deployed.

The commands should look similar to the following example:

export GKE_CLUSTER=gke-lt-cluster
export AR_REPO=dist-lt-repo
export REGION=us-central1
export ZONE=us-central1-b
export SAMPLE_APP_LOCATION=us-central

Set the following additional environment variables:

export GKE_NODE_TYPE=e2-standard-4
export GKE_SCOPE="https://www.googleapis.com/auth/cloud-platform"
export PROJECT=$(gcloud config get-value project)
export SAMPLE_APP_TARGET=${PROJECT}.appspot.com

Set the default zone so that you don't have to specify it in subsequent commands:

gcloud config set compute/zone ${ZONE}
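Because later commands interpolate these variables, an unset or misspelled one tends to surface as a confusing gcloud error several steps later. As an optional safeguard, a small helper like the following reports any variable from a list that is unset or empty. This is a sketch; the helper name check_env is illustrative.

```shell
#!/usr/bin/env bash
# Report which of the named variables are unset or empty.
# Prints one missing name per line; returns 1 if any are missing.
check_env() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [[ -z "${!name:-}" ]]; then
      echo "${name}"
      missing=1
    fi
  done
  return "${missing}"
}
```

For example: check_env GKE_CLUSTER AR_REPO REGION ZONE SAMPLE_APP_LOCATION GKE_NODE_TYPE GKE_SCOPE PROJECT SAMPLE_APP_TARGET && echo "all variables set"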

Create a GKE cluster

Create a service account with the minimum permissions required by the cluster:

gcloud iam service-accounts create dist-lt-svc-acc
gcloud projects add-iam-policy-binding ${PROJECT} --member=serviceAccount:dist-lt-svc-acc@${PROJECT}.iam.gserviceaccount.com --role=roles/artifactregistry.reader
gcloud projects add-iam-policy-binding ${PROJECT} --member=serviceAccount:dist-lt-svc-acc@${PROJECT}.iam.gserviceaccount.com --role=roles/container.nodeServiceAccount

Create the GKE cluster:

gcloud container clusters create ${GKE_CLUSTER} \
  --service-account=dist-lt-svc-acc@${PROJECT}.iam.gserviceaccount.com \
  --region ${REGION} \
  --machine-type ${GKE_NODE_TYPE} \
  --enable-autoscaling \
  --num-nodes 3 \
  --min-nodes 3 \
  --max-nodes 10 \
  --scopes "${GKE_SCOPE}"

Connect to the GKE cluster:

gcloud container clusters get-credentials ${GKE_CLUSTER} \
  --region ${REGION} \
  --project ${PROJECT}
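Because the create command above uses --region, it creates a regional cluster, and GKE applies --num-nodes, --min-nodes, and --max-nodes to each of the cluster's zones rather than to the cluster as a whole. The following sketch shows the resulting totals. The zone count you pass in is an assumption that depends on the region; you can check the zones the cluster actually uses with gcloud container clusters describe ${GKE_CLUSTER} --region ${REGION} --format="value(locations)".

```shell
#!/usr/bin/env bash
# Per-zone node settings multiply by the number of zones in a regional cluster.
# Usage: cluster_node_range ZONES NUM_NODES MIN_NODES MAX_NODES
cluster_node_range() {
  local zones="$1" num="$2" min="$3" max="$4"
  echo "initial=$(( zones * num )) min=$(( zones * min )) max=$(( zones * max ))"
}
```

For a region with three zones, cluster_node_range 3 3 3 10 prints initial=9 min=9 max=30, which is worth keeping in mind when you estimate costs.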

Set up the environment

Clone the sample repository from GitHub:

git clone https://github.com/GoogleCloudPlatform/distributed-load-testing-using-kubernetes

Change your working directory to the cloned repository:

cd distributed-load-testing-using-kubernetes

Build the container image

Create an Artifact Registry repository:

gcloud artifacts repositories create ${AR_REPO} \
  --repository-format=docker \
  --location=${REGION} \
  --description="Distributed load testing with GKE and Locust"

Build the container image and store it in your Artifact Registry repository:

export LOCUST_IMAGE_NAME=locust-tasks
export LOCUST_IMAGE_TAG=latest
gcloud builds submit \
  --tag ${REGION}-docker.pkg.dev/${PROJECT}/${AR_REPO}/${LOCUST_IMAGE_NAME}:${LOCUST_IMAGE_TAG} \
  docker-image

The accompanying Locust Docker image embeds a test task that calls the /login and /metrics endpoints in the sample application. In this example test task set, the respective ratio of requests submitted to these two endpoints is 1 to 999.

Verify that the Docker image is in your Artifact Registry repository:

gcloud artifacts docker images list ${REGION}-docker.pkg.dev/${PROJECT}/${AR_REPO} | \
  grep ${LOCUST_IMAGE_NAME}

The output is similar to the following:

Listing items under project PROJECT, location REGION, repository AR_REPO.

REGION-docker.pkg.dev/PROJECT/AR_REPO/locust-tasks  sha256:796d4be067eae7c82d41824791289045789182958913e57c0ef40e8d5ddcf283  2022-04-13T01:55:02  2022-04-13T01:55:02
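The build and verification steps, and the Deployment templates, all refer to the image through the same Artifact Registry path, REGION-docker.pkg.dev/PROJECT/AR_REPO/IMAGE:TAG. If you script these steps, composing the path in one place avoids typos. The helper below is an illustrative sketch that reads the variables defined earlier in this document and refuses to produce a path if any of them is empty.

```shell
#!/usr/bin/env bash
# Compose the Artifact Registry path used by gcloud builds submit.
# Reads REGION, PROJECT, AR_REPO, LOCUST_IMAGE_NAME, and LOCUST_IMAGE_TAG.
locust_image_uri() {
  local name
  for name in REGION PROJECT AR_REPO LOCUST_IMAGE_NAME LOCUST_IMAGE_TAG; do
    if [[ -z "${!name:-}" ]]; then
      echo "${name} is not set" >&2
      return 1
    fi
  done
  echo "${REGION}-docker.pkg.dev/${PROJECT}/${AR_REPO}/${LOCUST_IMAGE_NAME}:${LOCUST_IMAGE_TAG}"
}
```

For example, gcloud artifacts docker images describe "$(locust_image_uri)" describes the image you just pushed.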

Deploy the sample application

Create an App Engine application and deploy the sample-webapp to it:

gcloud app create --region=${SAMPLE_APP_LOCATION}
gcloud app deploy sample-webapp/app.yaml \
  --project=${PROJECT}

When prompted, type y to proceed with deployment.

The output is similar to the following:

File upload done.
Updating service [default]...done.
Setting traffic split for service [default]...done.
Deployed service [default] to [https://PROJECT.appspot.com]

The sample App Engine application implements /login and /metrics endpoints.

Deploy the Locust master and worker Pods

Substitute the environment variable values for the target host, project, and image parameters in the locust-master-controller.yaml.tpl, locust-worker-controller.yaml.tpl, and locust-master-service.yaml.tpl templates, and create the Locust master and worker Deployments and the Locust master Services:

envsubst < kubernetes-config/locust-master-controller.yaml.tpl | kubectl apply -f -
envsubst < kubernetes-config/locust-worker-controller.yaml.tpl | kubectl apply -f -
envsubst < kubernetes-config/locust-master-service.yaml.tpl | kubectl apply -f -
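envsubst replaces a variable that isn't set in its environment with an empty string, so a missing export produces a manifest with a blank image or host rather than an error. Before you run the commands above, you can list the variables that a template references and flag the ones that aren't exported. The following sketch assumes the templates use the ${VAR} form; the helper name is illustrative.

```shell
#!/usr/bin/env bash
# Print the ${VAR}-style variables referenced in a template that are not
# exported (or are empty). Returns 1 if any are missing.
# printenv checks the exported environment, which is what envsubst reads.
missing_template_vars() {
  local template="$1" name missing=0
  while read -r name; do
    if [[ -z "$(printenv "${name}")" ]]; then
      echo "${name}"
      missing=1
    fi
  done < <(grep -oE '\$\{[A-Za-z_][A-Za-z0-9_]*\}' "${template}" | tr -d '${}' | sort -u)
  return "${missing}"
}
```

For example: for f in kubernetes-config/*.yaml.tpl; do missing_template_vars "$f"; done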

Verify the Locust Deployments:

kubectl get pods -o wide

The output looks something like the following:

NAME                             READY   STATUS    RESTARTS   AGE   IP           NODE
locust-master-87f8ffd56-pxmsk    1/1     Running   0          1m    10.32.2.6    gke-gke-load-test-default-pool-96a3f394
locust-worker-58879b475c-279q9   1/1     Running   0          1m    10.32.1.5    gke-gke-load-test-default-pool-96a3f394
locust-worker-58879b475c-9frbw   1/1     Running   0          1m    10.32.2.8    gke-gke-load-test-default-pool-96a3f394
locust-worker-58879b475c-dppmz   1/1     Running   0          1m    10.32.2.7    gke-gke-load-test-default-pool-96a3f394
locust-worker-58879b475c-g8tzf   1/1     Running   0          1m    10.32.0.11   gke-gke-load-test-default-pool-96a3f394
locust-worker-58879b475c-qcscq   1/1     Running   0          1m    10.32.1.4    gke-gke-load-test-default-pool-96a3f394

Verify the Services:

kubectl get services

The output looks something like the following:

NAME                TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
kubernetes          ClusterIP      10.87.240.1     <none>        443/TCP             12m
locust-master       ClusterIP      10.87.245.22    <none>        5557/TCP,5558/TCP   1m
locust-master-web   LoadBalancer   10.87.246.225   <pending>     8089:31454/TCP      1m

Run a watch loop while the internal IP address of the internal passthrough Network Load Balancer (which GKE reports in the EXTERNAL-IP column) is provisioned for the Locust master web application Service:

kubectl get svc locust-master-web --watch

Press Ctrl+C to exit the watch loop after an EXTERNAL-IP address is provisioned.
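If you script these steps rather than watching interactively, you can poll the Service until the address appears. The following sketch retries a command until it prints non-empty output or a retry limit is reached; the helper name and the example values (60 attempts, 5 seconds apart) are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Run a command repeatedly until it prints non-empty output, then print it.
# Usage: wait_for_output ATTEMPTS INTERVAL_SECONDS COMMAND [ARGS...]
# Example:
#   INTERNAL_LB_IP="$(wait_for_output 60 5 kubectl get svc locust-master-web \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
wait_for_output() {
  local attempts="$1" interval="$2" out i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    out="$("$@" 2>/dev/null)" || out=""   # treat command errors as "not ready"
    if [[ -n "${out}" ]]; then
      echo "${out}"
      return 0
    fi
    sleep "${interval}"
  done
  echo "no output after ${attempts} attempts" >&2
  return 1
}
```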

Connect to Locust web frontend

You use the Locust master web interface to execute the load testing tasks against the system under test.

Make a note of the internal load balancer IP address of the web host service:

export INTERNAL_LB_IP=$(kubectl get svc locust-master-web \
  -o jsonpath="{.status.loadBalancer.ingress[0].ip}") && \
  echo $INTERNAL_LB_IP

Depending on your network configuration, there are two ways that you can connect to the Locust web application through the provisioned IP address:

Network routing. If your network is configured to allow routing from your workstation to your project VPC network, you can directly access the internal passthrough Network Load Balancer IP address from your workstation.

Proxy & SSH tunnel. If there is not a network route between your workstation and your VPC network, you can route traffic to the internal passthrough Network Load Balancer's IP address by creating a Compute Engine instance with an nginx proxy and an SSH tunnel between your workstation and the instance.

Network routing

If there is a route for network traffic between your workstation and your Google Cloud project VPC network, open your browser and then open the Locust master web interface. To open the Locust interface, go to the following URL:

http://INTERNAL_LB_IP:8089

Replace INTERNAL_LB_IP with the IP address that you noted in the previous step.

Proxy & SSH tunnel

Set an environment variable with the name of the instance.

export PROXY_VM=locust-nginx-proxy
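The startup script in the next command embeds the value of INTERNAL_LB_IP in the nginx configuration at the moment you run it, so an empty variable (for example, in a new Cloud Shell session) produces a proxy that points nowhere. A quick check before you create the instance, sketched here with an illustrative helper name:

```shell
#!/usr/bin/env bash
# Return 0 if the argument is a dotted-quad IPv4 address (each octet 0-255).
is_ipv4() {
  local ip="$1" octet
  [[ "${ip}" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so that octets such as 08 are not read as octal.
    (( 10#${octet} <= 255 )) || return 1
  done
}
```

For example: is_ipv4 "${INTERNAL_LB_IP}" || echo "INTERNAL_LB_IP is not an IPv4 address; rerun the export command from the previous section."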

Start an instance with an nginx Docker container configured to proxy the Locust web application port 8089 on the internal passthrough Network Load Balancer:

gcloud compute instances create-with-container ${PROXY_VM} \
  --zone ${ZONE} \
  --container-image gcr.io/cloud-marketplace/google/nginx1:latest \
  --container-mount-host-path=host-path=/tmp/server.conf,mount-path=/etc/nginx/conf.d/default.conf \
  --metadata=startup-script="#! /bin/bash
cat <<EOF > /tmp/server.conf
server {
    listen 8089;
    location / {
        proxy_pass http://${INTERNAL_LB_IP}:8089;
    }
}
EOF"

Open an SSH tunnel from Cloud Shell to the proxy instance:

gcloud compute ssh --zone ${ZONE} ${PROXY_VM} \
  -- -N -L 8089:localhost:8089

Click the Web Preview icon, and select Change Port from the options listed.

On the Change Preview Port dialog, enter 8089 in the Port Number field, and select Change and Preview.

In a moment, a browser tab will open with the Locust web interface.

Run a basic load test on your sample application

After you open the Locust frontend in your browser, you see a dialog that can be used to start a new load test.

Specify the total Number of users (peak concurrency) as 10 and the Spawn rate (users started/second) as 5 users per second.

Click Start swarming to begin the simulation.

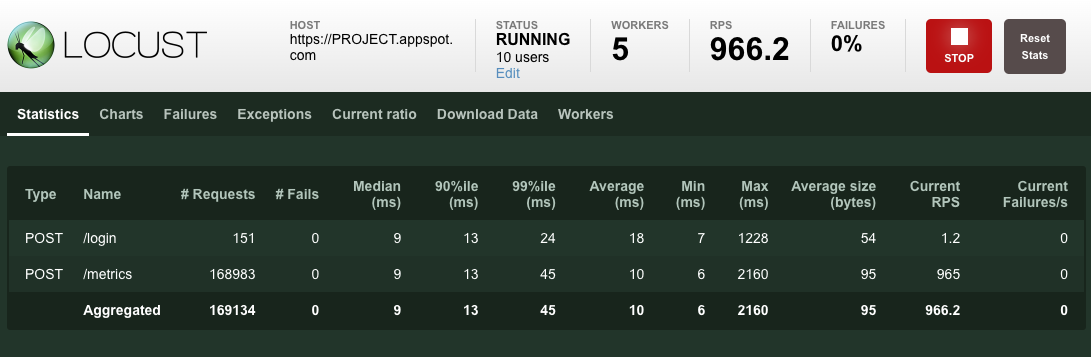
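As a rough guide to these two settings: the spawn rate controls how quickly Locust adds simulated users, so reaching the target user count takes about users divided by spawn rate seconds. With the values above (10 users at 5 users per second), all users are running after about 2 seconds. A small helper for other values, sketched here as an illustration that rounds up to whole seconds:

```shell
#!/usr/bin/env bash
# Approximate seconds until Locust reaches the target user count,
# rounded up: ceil(users / spawn_rate). Integer inputs only.
ramp_up_seconds() {
  local users="$1" rate="$2"
  echo $(( (users + rate - 1) / rate ))
}
```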

After requests start swarming, statistics begin to aggregate for simulation metrics, such as the number of requests and requests per second, as shown in the following image:

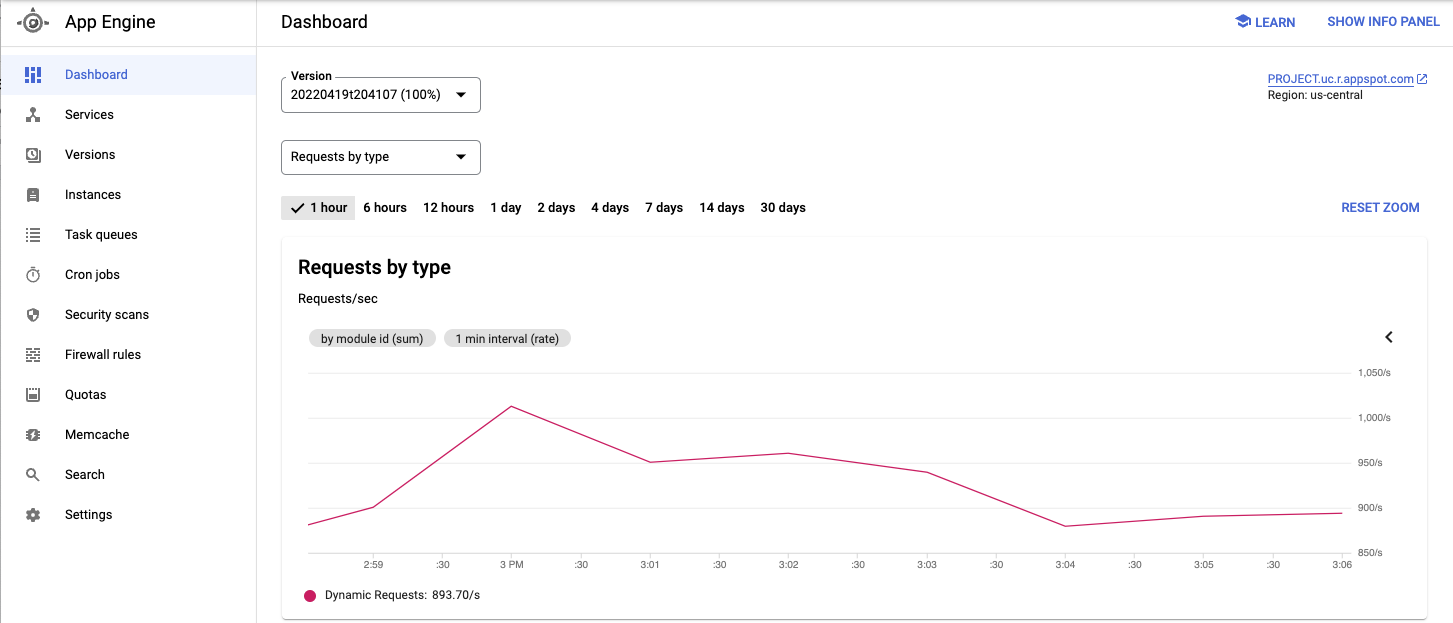

View the deployed service and other metrics from the Google Cloud console.

When you have observed the behavior of the application under test, click Stop to terminate the test.

Scale up the number of users (optional)

If you want to test increased load on the application, you can add simulated users. Before you can add simulated users, you must ensure that there are enough resources to support the increase in load. With GKE, you can add Locust worker Pods to the Deployment without redeploying the existing Pods, as long as you have the underlying VM resources to support an increased number of Pods. Because the cluster is regional, its node settings apply to each of its zones: it starts with 3 nodes per zone and can autoscale up to 10 nodes per zone.

Scale the pool of Locust worker Pods to 20.

kubectl scale deployment/locust-worker --replicas=20

It takes a few minutes to deploy and start the new Pods.

If you see a Pod Unschedulable error, you must add more nodes to the cluster. For details, see resizing a GKE cluster.
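Pods that can't be scheduled stay in the Pending status, so one way to see whether the scale-up is still waiting for capacity is to count worker Pods by status in the kubectl get pods output. The following sketch parses the default table format (STATUS is the third column) from standard input; the helper name is illustrative.

```shell
#!/usr/bin/env bash
# Count Pods whose name starts with PREFIX and whose STATUS column equals
# STATUS, reading `kubectl get pods` table output on stdin.
# Usage: kubectl get pods | count_pods locust-worker- Pending
count_pods() {
  local prefix="$1" status="$2"
  awk -v p="${prefix}" -v s="${status}" \
    'NR > 1 && index($1, p) == 1 && $3 == s { n++ } END { print n + 0 }'
}
```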

After the Pods start, return to the Locust master web interface and restart load testing.

Extend the pattern

To extend this pattern, you can create new Locust tasks or even switch to a different load testing framework.

You can customize the metrics you collect. For example, you might want to measure the requests per second, or monitor the response latency as load increases, or check the response failure rates and types of errors.

For more information, see the Cloud Monitoring documentation.
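For a quick look at failure rates without extra tooling, you can compute them from the request statistics CSV that the Locust web interface lets you download. The sketch below assumes the file has a header row with Name, Request Count, and Failure Count columns, which matches recent Locust versions but may differ in yours. It looks up columns by header name rather than position, strips surrounding quotes, and assumes no field contains an embedded comma.

```shell
#!/usr/bin/env bash
# Print "<name> <failure percentage>" for each data row of a Locust-style
# stats CSV. Assumes "Name", "Request Count", and "Failure Count" headers.
failure_rates() {
  awk -F',' '
    { for (i = 1; i <= NF; i++) gsub(/^"|"$/, "", $i) }   # strip quotes
    NR == 1 { for (i = 1; i <= NF; i++) col[$i] = i; next } # map header -> index
    {
      reqs = $(col["Request Count"]) + 0
      fails = $(col["Failure Count"]) + 0
      rate = (reqs > 0) ? 100 * fails / reqs : 0
      printf "%s %.2f\n", $(col["Name"]), rate
    }' "$@"
}
```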

Clean up

To avoid incurring charges to your Google Cloud account for the resources used in this document, either delete the project that contains the resources, or keep the project and delete the individual resources.

Delete the project

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.

Delete the GKE cluster

If you don't want to delete the whole project, run the following command to delete the GKE cluster:

gcloud container clusters delete ${GKE_CLUSTER} --region ${REGION}
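Deleting the cluster leaves the other resources that this document creates in place. The following sketch deletes them individually and assumes that the environment variables from earlier in this document are still set. The DRY_RUN switch is a convenience added for this sketch: with the default DRY_RUN=1 it only prints the commands. The proxy instance exists only if you used the proxy and SSH tunnel option. App Engine applications can't be deleted, only disabled, so deleting the project is the only way to remove the sample application completely.

```shell
#!/usr/bin/env bash
# Sketch: delete the other resources created in this document.
# Assumes AR_REPO, REGION, ZONE, PROXY_VM, and PROJECT are set as above.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

cleanup_extra_resources() {
  run gcloud artifacts repositories delete "${AR_REPO}" --location="${REGION}"
  run gcloud compute instances delete "${PROXY_VM}" --zone="${ZONE}"
  run gcloud iam service-accounts delete "dist-lt-svc-acc@${PROJECT}.iam.gserviceaccount.com"
}
```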

What's next

- Building Scalable and Resilient Web Applications.

- Review GKE documentation in more detail.

- Try tutorials on GKE.

- For more reference architectures, diagrams, and best practices, explore the Cloud Architecture Center.