This page describes the architecture of Cloud Composer environments.

Environment architecture configurations

Cloud Composer 3 environments have a single configuration that does not depend on the networking type:

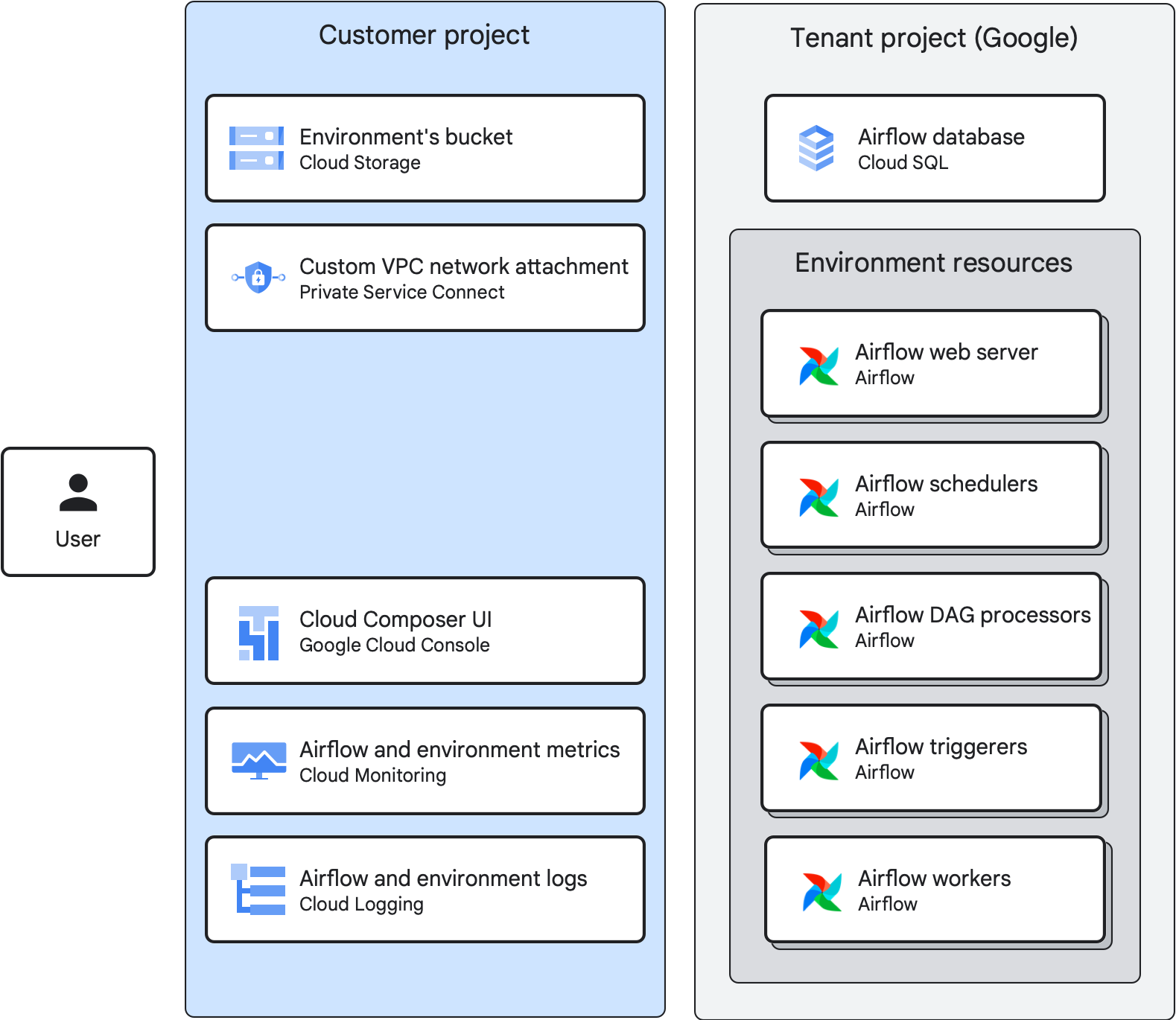

Customer and tenant projects

When you create an environment, Cloud Composer distributes the environment's resources between a tenant and a customer project:

- Customer project is a Google Cloud project where you create your environments. You can create more than one environment in a single customer project.

- Tenant project is a Google-managed project. The tenant project provides unified access control and an additional layer of data security to your environment. Each Cloud Composer environment has its own tenant project.

Environment components

A Cloud Composer environment consists of environment components.

An environment component is an element of a managed Airflow infrastructure that runs on Google Cloud, as a part of your environment. Environment components run either in the tenant or in the customer project of your environment.

Environment's bucket

Environment's bucket is a Cloud Storage bucket that stores DAGs, plugins, data dependencies, and Airflow logs. Environment's bucket is located in the customer project.

When you upload your DAG files to the /dags folder in your environment's bucket, Cloud Composer synchronizes the DAGs to Airflow components of your environment.
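
As a rough illustration of where an uploaded DAG file ends up, the sketch below builds the destination URI for a local file under the /dags folder. The bucket name `example-composer-bucket` and the helper `dag_destination_uri` are hypothetical names chosen for this example. The code only constructs the URI and does not upload anything.

```python
from pathlib import PurePosixPath

DAGS_FOLDER = "dags"  # the /dags folder in the environment's bucket


def dag_destination_uri(bucket_name: str, local_path: str) -> str:
    """Return the gs:// URI a local DAG file would have in the /dags folder.

    Hypothetical helper for illustration: it keeps only the file name
    and performs no upload.
    """
    file_name = PurePosixPath(local_path).name
    if not file_name:
        raise ValueError("local_path must point to a file")
    return f"gs://{bucket_name}/{DAGS_FOLDER}/{file_name}"


if __name__ == "__main__":
    # "example-composer-bucket" is a placeholder, not a real bucket name.
    print(dag_destination_uri("example-composer-bucket", "workflows/my_dag.py"))
```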

Airflow web server

Airflow web server runs the Airflow UI of your environment.

Cloud Composer provides access to the interface based on user identities and IAM policy bindings defined for users.

Airflow database

Airflow database is a Cloud SQL instance that runs in the tenant project of your environment. It hosts the Airflow metadata database.

To protect sensitive connection and workflow information, Cloud Composer allows database access only to the service account of your environment.

Other Airflow components

Other Airflow components that run in your environment are:

- Airflow schedulers parse DAG definition files, schedule DAG runs based on the schedule interval, and queue tasks for execution by Airflow workers.

- Airflow triggerers asynchronously monitor all deferred tasks in your environment. If you set the number of triggerers in your environment above zero, then you can use deferrable operators in your DAGs.

- Airflow DAG processors process DAG files and turn them into DAG objects. In Cloud Composer 3, DAG processors run as separate environment components.

- Airflow workers execute tasks that are scheduled by Airflow schedulers. The number of workers in your environment changes dynamically between the configured minimum and maximum, depending on the number of tasks in the queue.
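
One way to picture the worker scaling behavior is a worker count that follows the queue size while staying within a minimum and a maximum. The sketch below is a simplified model only, not Cloud Composer's actual autoscaling logic. The function name `target_worker_count` and the `tasks_per_worker` parameter are assumptions introduced for this example.

```python
import math


def target_worker_count(
    queued_tasks: int,
    tasks_per_worker: int,
    min_workers: int,
    max_workers: int,
) -> int:
    """Illustrative model: workers needed for the queue, clamped to the limits.

    This is an assumption-based sketch, not the formula Cloud Composer uses.
    """
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    # Workers needed if each worker handles `tasks_per_worker` tasks at once.
    needed = math.ceil(queued_tasks / tasks_per_worker)
    # Never go below the minimum or above the maximum.
    return max(min_workers, min(max_workers, needed))
```

In this model, an empty queue yields the minimum worker count, and a very long queue yields the maximum.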

Cloud Composer 3 environment architecture

In Cloud Composer 3 environments:

- The tenant project hosts a Cloud SQL instance with the Airflow database.

- All Airflow resources run in the tenant project.

- The customer project hosts the environment's bucket.

- A custom VPC network attachment in the customer project can be used to attach the environment to a custom VPC network. You can use an existing attachment or Cloud Composer can create it automatically on demand. It is also possible to detach an environment from a VPC network.

- Google Cloud console, Monitoring, and Logging in the customer project provide ways to manage the environment, DAGs, and DAG runs, and to access the environment's metrics and logs. You can also use the Airflow UI, Google Cloud CLI, Cloud Composer API, and Terraform for the same purposes.
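
The placement described in the list above can be summarized as a small lookup table. This is a sketch for orientation only: the names `Project`, `COMPONENT_PLACEMENT`, and `components_in` are hypothetical, and the table lists only the components named on this page.

```python
from enum import Enum


class Project(Enum):
    TENANT = "tenant"
    CUSTOMER = "customer"


# Placement as described on this page for Cloud Composer 3 environments.
COMPONENT_PLACEMENT = {
    "Airflow database (Cloud SQL)": Project.TENANT,
    "Airflow web server": Project.TENANT,
    "Airflow schedulers": Project.TENANT,
    "Airflow triggerers": Project.TENANT,
    "Airflow DAG processors": Project.TENANT,
    "Airflow workers": Project.TENANT,
    "Environment's bucket": Project.CUSTOMER,
    "VPC network attachment": Project.CUSTOMER,
}


def components_in(project: Project) -> list[str]:
    """Return the names of components hosted in the given project, sorted."""
    return sorted(
        name for name, host in COMPONENT_PLACEMENT.items() if host is project
    )


if __name__ == "__main__":
    for project in Project:
        print(project.value, components_in(project))
```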

Integration with Cloud Logging and Cloud Monitoring

Cloud Composer integrates with Cloud Logging and Cloud Monitoring of your Google Cloud project, so that you have a central place to view Airflow and DAG logs.

Cloud Monitoring collects and ingests metrics, events, and metadata from Cloud Composer to generate insights through dashboards and charts.

Because of the streaming nature of Cloud Logging, you can view logs emitted by Airflow components immediately instead of waiting for Airflow logs to appear in the Cloud Storage bucket of your environment.

To limit the number of logs in your Google Cloud project, you can stop ingestion of all logs. Do not disable Logging.