Step 1: Establish workloads

This page guides you through the initial step of setting up your data foundation, the core of Cortex Framework. Built on top of BigQuery storage, the data foundation organizes your incoming data from various sources. This organized data makes it easier to analyze and use for AI development.

Set up data integration

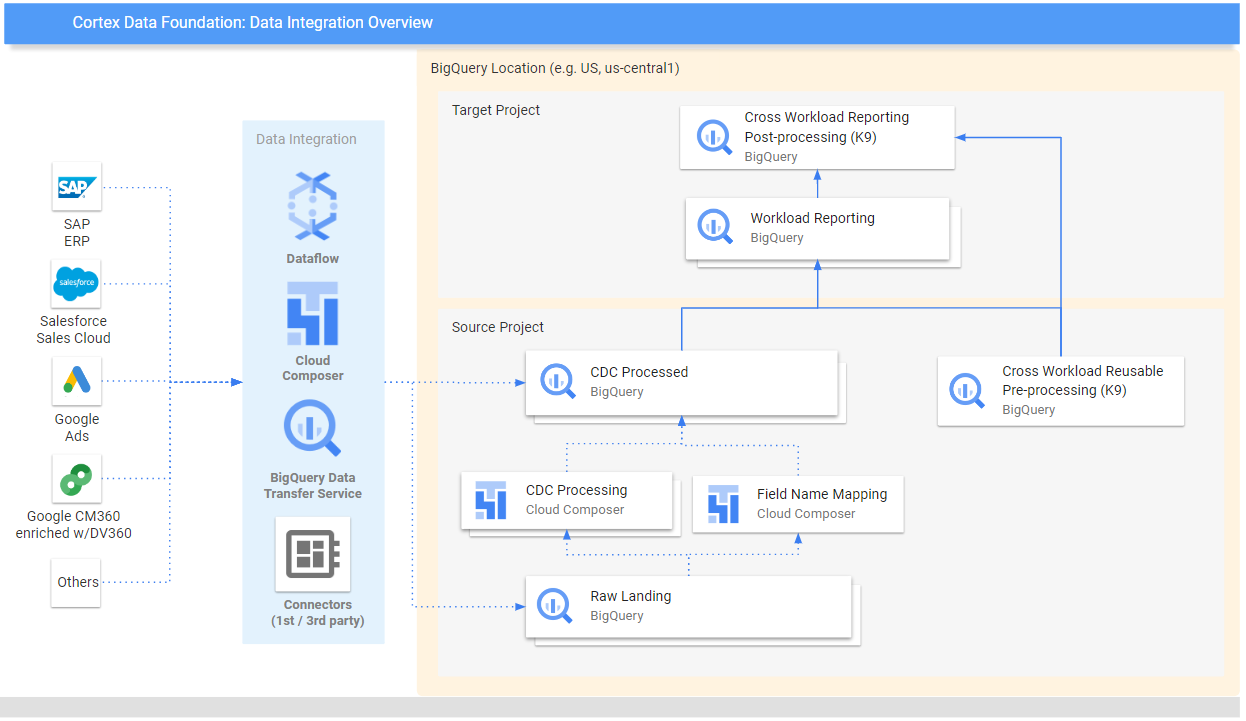

Get started by defining some key parameters to act as a blueprint for organizing and using your data efficiently within Cortex Framework. Remember, these parameters can vary depending on the specific workload, your chosen data flow, and the integration mechanism. The following diagram provides an overview of data integration within the Cortex Framework Data Foundation:

Define the following parameters before deployment for efficient and effective data utilization within Cortex Framework.

Projects

- Source project: Project where your raw data lives. You need at least one Google Cloud project to store data and run the deployment process.

- Target project (optional): Project where Cortex Framework Data Foundation stores its processed data models. This can be the same as the source project, or a different one depending on your needs.

If you want to have separate sets of projects and datasets for each workload (for example, one set of source and target projects for SAP and a different set of target and source projects for Salesforce), run separate deployments for each workload. For more information, see Using different projects to segregate access in the optional steps section.

Data model

- Deploy Models: Choose whether you need to deploy models for all workloads or only one set of models (for example, SAP, Salesforce, and Meta). For more information, see available Data sources and workloads.

BigQuery datasets

- Source Dataset (Raw): BigQuery dataset where the source data is replicated to or where the test data is created. The recommendation is to have separate datasets, one for each data source. For example, one raw dataset for SAP and one raw dataset for Google Ads. This dataset belongs to the source project.

- CDC Dataset: BigQuery dataset where the CDC processed data lands the latest available records. Some workloads allow for field name mapping. The recommendation is to have a separate CDC dataset for each source. For example, one CDC dataset for SAP, and one CDC dataset for Salesforce. This dataset belongs to the source project.

- Target Reporting Dataset: BigQuery dataset where the Data Foundation predefined data models are deployed. We recommend to have a separate reporting dataset for each source. For example, one reporting dataset for SAP and one reporting dataset for Salesforce. This dataset is automatically created during deployment if it doesn't exist. This dataset belongs to the Target project.

- Target BigQuery Machine Learning Dataset: BigQuery dataset where the BigQuery Machine Learning (BQML) predefined models are deployed. This dataset is automatically created during deployment if it doesn't exist. This dataset belongs to the Target project.

- Pre-processing K9 Dataset: BigQuery dataset where

cross-workload, reusable DAG components, such as

timedimensions, can be deployed. Workloads have a dependency on this dataset unless modified. This dataset is automatically created during deployment if it doesn't exist. This dataset belongs to the source project. - Post-processing K9 Dataset: BigQuery dataset where cross-workload reporting (for example, SAP + Google Ads reporting for CATGAP), and additional external source DAGs (for example, Google Trends ingestion) can be deployed. This dataset is automatically created during deployment if it doesn't exist. This dataset belongs to the Target project.

Optional: Generate sample data

Cortex Framework can generate sample data and tables for you if you don't have access to your own data, or replication tools to set up data, or even if you only want to see how Cortex Framework works. However, you still need to create and identify the CDC and Raw datasets ahead of time.

Create BigQuery datasets for raw data and CDC per data source, with the following instructions.

Console

Open the BigQuery page in the Google Cloud console.

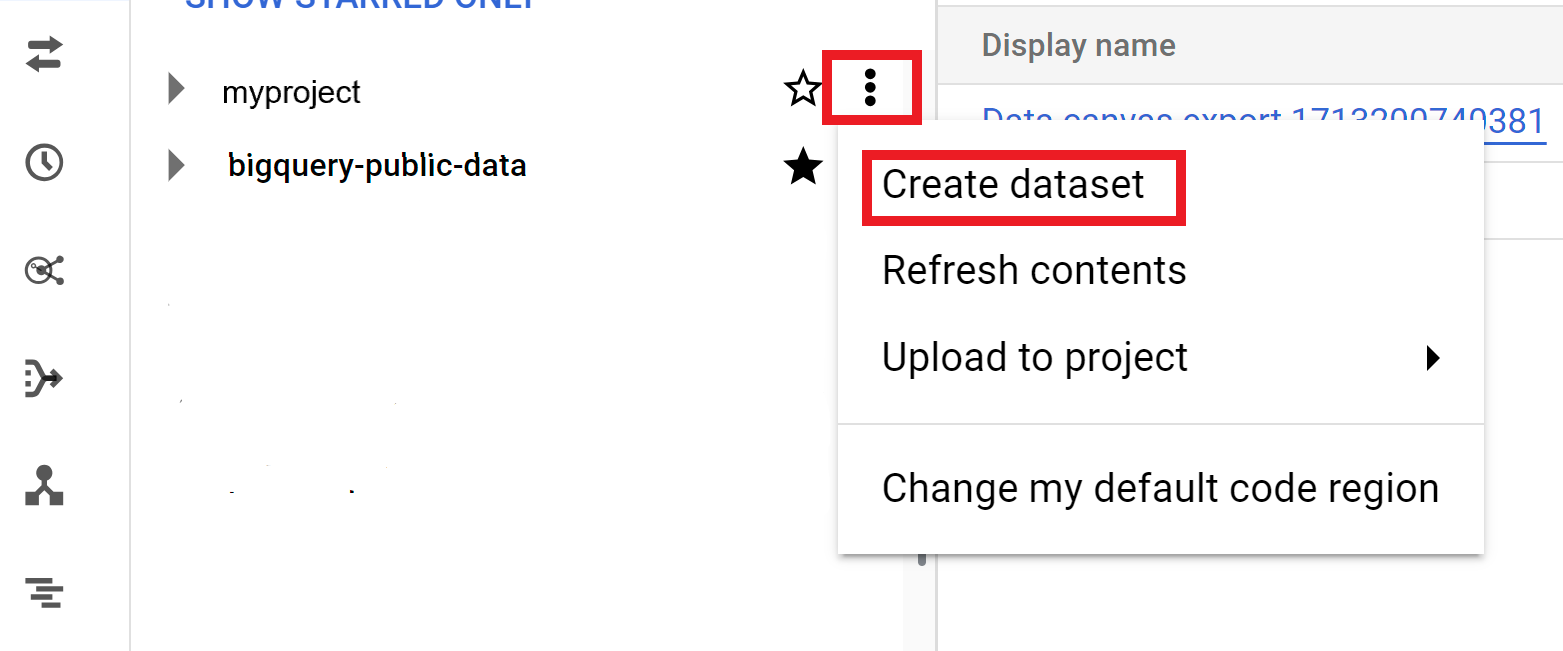

In the Explorer panel, select the project where you want to create the dataset.

Expand the Actions option and click Create dataset:

On the Create dataset page:

- For Dataset ID, enter a unique dataset name.

For Location type, choose a geographic location for the dataset. After a dataset is created, the location can't be changed.

Optional. For more customization details for your dataset, see Create datasets: Console.

Click Create dataset.

bq

Create a new dataset for raw data by copying the following command:

bq --location= LOCATION mk -d SOURCE_PROJECT: DATASET_RAWReplace the following:

LOCATIONwith the dataset's location.SOURCE_PROJECTwith your source project ID.DATASET_RAWwith the name for your dataset for raw data. For example,CORTEX_SFDC_RAW.

Create a new dataset for CDC data by copying the following command:

bq --location=LOCATION mk -d SOURCE_PROJECT: DATASET_CDCReplace the following:

LOCATIONwith the dataset's location.SOURCE_PROJECTwith your source project ID.DATASET_CDCwith the name for your dataset for CDC data. For example,CORTEX_SFDC_CDC.

Confirm that the datasets were created with the following command:

bq lsOptional. For more information about creating datasets, see Create datasets.

Next steps

After you complete this step, move on to the following deployment steps:

- Establish workloads (this page).

- Clone repository.

- Determine integration mechanism.

- Set up components.

- Configure deployment.

- Execute deployment.