You can use either the Google Cloud console or the Vertex AI SDK for Python to add a pipeline run to either an experiment or an experiment run.

Google Cloud console

Use the following instructions to run an ML pipeline and associate the pipeline with an experiment and, optionally, an experiment run using the Google Cloud console. Experiment runs can only be created through the Vertex AI SDK for Python (see Create and manage experiment runs).

- In the Google Cloud console, in the Vertex AI section, go to the Pipelines page.

Go to Pipelines

- In the Region drop-down list, select the region that you want to create a pipeline run in.

- Click Create run to open the Create pipeline run pane.

- Specify the following Run details.

- In the File field, click Choose to open the file selector. Navigate to the compiled pipeline JSON file that you want to run, select the pipeline, and click Open.

- The Pipeline name defaults to the name that you specified in the pipeline definition. Optionally, specify a different Pipeline name.

- Specify a Run name to uniquely identify this pipeline run.

- To specify that this pipeline run uses a custom service account, a customer-managed encryption key, or a peered VPC network, click Advanced options (Optional).

Use the following instructions to configure advanced options such as a custom service account.

- To specify a service account, select a service account from the Service account drop-down list.

If you do not specify a service account, Vertex AI Pipelines runs your pipeline using the default Compute Engine service account.

Learn more about configuring a service account for use with Vertex AI Pipelines.

- To use a customer-managed encryption key (CMEK), select Use a customer-managed encryption key. The Select a customer-managed key drop-down list appears. In the Select a customer-managed key drop-down list, select the key that you want to use.

- To use a peered VPC network in this pipeline run, enter the VPC network name in the Peered VPC network box.

- Click Continue.

The Cloud Storage location and Pipeline parameters pane appears.

- Required: Enter the Cloud Storage output directory, for example: gs://location_of_directory.

- Optional: Specify the parameters that you want to use for this pipeline run.

- Click Submit to create your pipeline run.

- After the pipeline is submitted, it appears in the Pipelines table in the Google Cloud console.

- In the row associated with your pipeline, click View more > Add to Experiment.

- Select an existing Experiment or create a new one.

- Optional: If Experiment runs are associated with the Experiment, they show up in the drop-down. Select an existing Experiment run.

- Click Save.

Compare a pipeline run with experiment runs using the Google Cloud console

- In the Google Cloud console, go to the Experiments page.

Go to Experiments.

A list of experiments appears in the Experiments page.

- Select the experiment you want to add your pipeline run to.

A list of runs appears.

- Select the runs you want to compare, then click Compare.

- Click the Add run button. A list of runs appears.

- Select the pipeline run you want to add. The run is added.

Vertex AI SDK for Python

The following samples use the PipelineJob API.

Associate pipeline run with an experiment

This sample shows how to associate a pipeline run with an experiment. When you want to compare pipeline runs, associate each pipeline run with an experiment. See init in the Vertex AI SDK for Python reference documentation.

Vertex AI SDK for Python

- experiment_name: Provide a name for your experiment. You can find your list of experiments in the Google Cloud console by selecting Experiments in the section nav.

- pipeline_job_display_name: The user-defined name of this Pipeline.

- template_path: The path of PipelineJob or PipelineSpec JSON or YAML file. It can be a local path or a Cloud Storage URI. Example: "gs://project.name"

- pipeline_root: The root of the pipeline outputs. Defaults to the staging bucket.

- parameter_values: The mapping from runtime parameter names to their values that control the pipeline run.

- project: Your project ID. You can find these IDs in the Google Cloud console welcome page.

- location: See List of available locations.
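The parameters above fit together roughly as in the following sketch. It assumes the google-cloud-aiplatform package is installed and that application credentials are configured. The function name submit_pipeline_to_experiment is illustrative and not part of the SDK. The experiment is attached by passing its name to the experiment argument of PipelineJob.submit.

```python
from typing import Any, Dict, Optional


def submit_pipeline_to_experiment(
    experiment_name: str,
    pipeline_job_display_name: str,
    template_path: str,
    pipeline_root: str,
    project: str,
    location: str,
    parameter_values: Optional[Dict[str, Any]] = None,
):
    """Submit a pipeline run and associate it with an experiment.

    The function name is illustrative; it is not part of the SDK.
    """
    # Imported inside the function so this module still loads where the
    # SDK is not installed.
    from google.cloud import aiplatform

    # Set the project and region used by the SDK calls that follow.
    aiplatform.init(project=project, location=location)

    # Define the pipeline run from a compiled pipeline file.
    pipeline_job = aiplatform.PipelineJob(
        display_name=pipeline_job_display_name,
        template_path=template_path,
        pipeline_root=pipeline_root,
        parameter_values=parameter_values,
    )

    # Start the run and associate it with the named experiment.
    pipeline_job.submit(experiment=experiment_name)
    return pipeline_job
```

Returning the PipelineJob object lets the caller inspect the run later or pass it on to other SDK calls.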

Associate pipeline run with experiment run

This sample shows how to associate a pipeline run with an experiment run.

Use cases:

- When doing local model training and then running evaluation on that model (evaluation is done by using a pipeline). In this case, you'd want to write the eval metrics from your pipeline run to an ExperimentRun.

- When re-running the same pipeline multiple times. For example, if you change the input parameters, or if one component fails and you need to run it again.

When associating a pipeline run to an experiment run, parameters and metrics are not automatically surfaced and need to be logged manually using the logging APIs.
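As a minimal sketch of that manual logging, the following function writes parameters and metrics to an experiment run with log_params and log_metrics. It assumes the google-cloud-aiplatform package is installed and that the experiment run already exists, so it opens the run with resume=True. The function name and the example values are hypothetical.

```python
from typing import Dict, Union


def log_results_to_experiment_run(
    experiment_name: str,
    run_name: str,
    params: Dict[str, Union[float, int, str]],
    metrics: Dict[str, Union[float, int]],
    project: str,
    location: str,
) -> None:
    """Manually log parameters and metrics to an existing experiment run.

    The function name is illustrative; it is not part of the SDK.
    """
    # Imported inside the function so this module still loads where the
    # SDK is not installed.
    from google.cloud import aiplatform

    aiplatform.init(experiment=experiment_name, project=project, location=location)

    # Assumption: the run was created earlier, so it is resumed here.
    aiplatform.start_run(run=run_name, resume=True)
    try:
        aiplatform.log_params(params)
        aiplatform.log_metrics(metrics)
    finally:
        # Close the run even if a logging call raises.
        aiplatform.end_run()
```

For example, after an evaluation pipeline finishes, you might call it with params={"learning_rate": 0.01} and metrics={"accuracy": 0.9}, using values read from the pipeline outputs.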

Note: When the optional resume parameter is specified as TRUE, the previously started run resumes. When not specified, resume defaults to FALSE and a new run is created.

See init, start_run, and log in the Vertex AI SDK for Python reference documentation.

Vertex AI SDK for Python

- experiment_name: Provide a name for your experiment. You can find your list of experiments in the Google Cloud console by selecting Experiments in the section nav.

- run_name: Specify a run name.

- pipeline_job: A Vertex AI PipelineJob.

- project: Your project ID. You can find these IDs in the Google Cloud console welcome page.

- location: See List of available locations.
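A sketch of how these parameters might be used follows. It assumes the google-cloud-aiplatform package is installed and that pipeline_job is an existing PipelineJob object. The function name and the resume argument on the wrapper are illustrative choices, not part of the SDK. The association itself is made by passing the job to the pipeline_job argument of log.

```python
from typing import Any


def add_pipeline_job_to_experiment_run(
    experiment_name: str,
    run_name: str,
    pipeline_job: Any,  # expected to be an aiplatform.PipelineJob
    project: str,
    location: str,
    resume: bool = False,
) -> None:
    """Associate a pipeline run with an experiment run.

    The function name is illustrative; it is not part of the SDK.
    """
    # Imported inside the function so this module still loads where the
    # SDK is not installed.
    from google.cloud import aiplatform

    aiplatform.init(experiment=experiment_name, project=project, location=location)

    # resume=False creates a new run; resume=True reopens an existing one.
    aiplatform.start_run(run=run_name, resume=resume)
    try:
        # Link the pipeline run to the active experiment run.
        aiplatform.log(pipeline_job=pipeline_job)
    finally:
        # Close the run even if the log call raises.
        aiplatform.end_run()
```

Pass resume=True when the experiment run was created earlier, for example when re-running the same pipeline with different input parameters.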

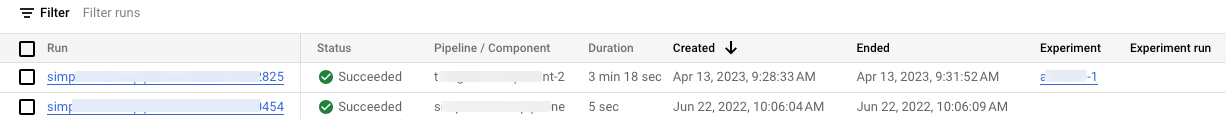

View list of pipeline runs in Google Cloud console

In the Google Cloud console, in the Vertex AI section, go to the Pipelines page.

Check to be sure you are in the correct project.

A list of experiments and runs associated with your project's pipeline runs appears in the Experiment and Experiment run columns, respectively.

Codelab

Make the Most of Experimentation: Manage Machine Learning Experiments with Vertex AI

This codelab involves using Vertex AI to build a pipeline that trains a custom Keras Model in TensorFlow. Vertex AI Experiments is used to track and compare experiment runs in order to identify which combination of hyperparameters results in the best performance.