Deploy a Dataproc Metastore service

This page shows you how to create a Dataproc Metastore service and connect to it from a Dataproc cluster. Afterward, you SSH into the cluster, launch an instance of Apache Hive, and run some basic queries.

Dataproc Metastore provides you with a fully compatible Hive Metastore (HMS), which is the established standard in the open source big data ecosystem for managing technical metadata. This service helps you manage the metadata of your data lakes and provides interoperability between the various data processing tools you're using.


Before you begin

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Google Cloud project.

- Enable the Dataproc Metastore and Dataproc APIs.

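If you work from a terminal instead of the console, the two APIs can also be enabled with gcloud services enable. The following is a minimal sketch, assuming the gcloud CLI is installed and authenticated; enable_tutorial_apis is a hypothetical helper name, not part of gcloud.

```shell
# Hypothetical helper: enables the Dataproc Metastore and Dataproc APIs
# in the given project. Assumes an installed, authenticated gcloud CLI.
enable_tutorial_apis() {
  local project_id="$1"
  if [ -z "$project_id" ]; then
    echo "usage: enable_tutorial_apis PROJECT_ID" >&2
    return 1
  fi
  gcloud services enable metastore.googleapis.com dataproc.googleapis.com \
    --project="$project_id"
}
```

For example, run enable_tutorial_apis PROJECT_ID, replacing PROJECT_ID with the ID of the project you selected or created.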

Required roles

To get the permissions that you need to create a Dataproc Metastore and a Dataproc cluster, ask your administrator to grant you the following IAM roles:

- To grant full access to all Dataproc Metastore resources, including setting IAM permissions: Dataproc Metastore Admin (roles/metastore.admin) on the user account or service account

- To grant full control of Dataproc Metastore resources: Dataproc Metastore Editor (roles/metastore.editor) on the user account or service account

- To create a Dataproc cluster: Dataproc Worker (roles/dataproc.worker) on the service account

For more information about granting roles, see Manage access to projects, folders, and organizations.
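An administrator can grant these roles from the command line with gcloud projects add-iam-policy-binding. The following is a minimal sketch; grant_project_role is a hypothetical helper, and the principals in the usage note (USER_EMAIL, SERVICE_ACCOUNT_EMAIL) are placeholders for real accounts.

```shell
# Hypothetical helper: binds one IAM role to one principal at the project level.
# MEMBER uses gcloud's principal syntax, for example user:EMAIL or serviceAccount:EMAIL.
grant_project_role() {
  local project_id="$1" member="$2" role="$3"
  if [ -z "$project_id" ] || [ -z "$member" ] || [ -z "$role" ]; then
    echo "usage: grant_project_role PROJECT_ID MEMBER ROLE" >&2
    return 1
  fi
  gcloud projects add-iam-policy-binding "$project_id" \
    --member="$member" \
    --role="$role"
}
```

For example, grant_project_role PROJECT_ID user:USER_EMAIL roles/metastore.editor covers the user account, and grant_project_role PROJECT_ID serviceAccount:SERVICE_ACCOUNT_EMAIL roles/dataproc.worker covers the cluster's service account.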

These predefined roles contain the permissions required to create a Dataproc Metastore and a Dataproc cluster. The following section lists the exact permissions.

Required permissions

The following permissions are required to create a Dataproc Metastore and a Dataproc cluster:

- To create a Dataproc Metastore service: metastore.services.create on the user account or service account

- To create a Dataproc cluster: Dataproc Worker (roles/dataproc.worker) on the service account

You might also be able to get these permissions with custom roles or other predefined roles.

For more information about specific Dataproc Metastore roles and permissions, see Dataproc Metastore IAM overview.

Create a Dataproc Metastore service

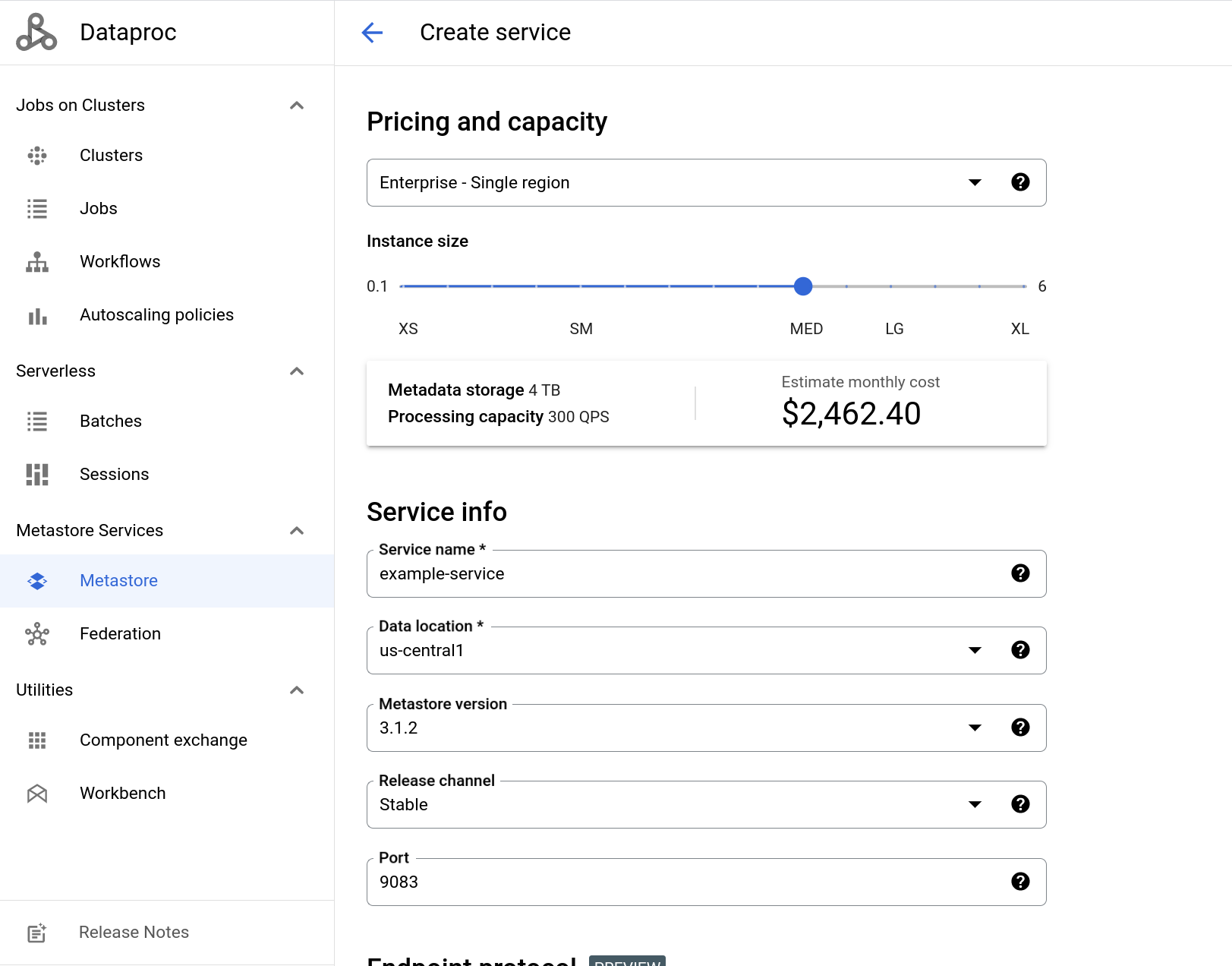

The following instructions show you how to create a basic Dataproc Metastore service using the provided default settings.

Console

In the Google Cloud console, go to the Dataproc Metastore page.

In the navigation menu, click +Create.

The Create Metastore service dialog opens.

Select Dataproc Metastore 2.

In the Service name field, enter example-service.

In the Data location field, select us-central1.

For the remaining service configuration options, use the provided defaults.

To create and start the service, click Submit.

Your new metastore service appears on the Dataproc Metastore page. The status displays Creating until the service is ready to use. When it's ready, the status changes to Active. Provisioning the service might take a couple of minutes.

The following screenshot shows an example of the Create service page using some of the provided defaults.

gcloud CLI

To create a metastore service using the provided defaults, run the following gcloud metastore services create command:

gcloud metastore services create example-service \
    --location=us-central1 \
    --instance-size=MEDIUM

This command creates a service named example-service in the us-central1 region with the MEDIUM instance size.
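Because provisioning takes a few minutes, a script that runs the next step right away might find the service still in the CREATING state. The following is a minimal sketch that polls gcloud metastore services describe until the state is ACTIVE; wait_for_metastore, its polling interval, and its attempt limit are hypothetical choices for illustration.

```shell
# Hypothetical helper: polls a Dataproc Metastore service until its state is ACTIVE.
# Arguments: SERVICE LOCATION [INTERVAL_SECONDS] [MAX_ATTEMPTS]
wait_for_metastore() {
  local service="$1" location="$2" interval="${3:-30}" max_attempts="${4:-20}"
  local attempt=1 state
  while [ "$attempt" -le "$max_attempts" ]; do
    # value(state) prints only the state field, for example CREATING or ACTIVE.
    state="$(gcloud metastore services describe "$service" \
      --location="$location" --format='value(state)')"
    echo "Attempt $attempt: $service is ${state:-UNKNOWN}"
    if [ "$state" = "ACTIVE" ]; then
      return 0
    fi
    # Sleep only if another attempt remains.
    if [ "$attempt" -lt "$max_attempts" ]; then
      sleep "$interval"
    fi
    attempt=$((attempt + 1))
  done
  echo "Timed out waiting for $service to become ACTIVE" >&2
  return 1
}
```

For example, wait_for_metastore example-service us-central1 checks every 30 seconds for up to 20 attempts, roughly 10 minutes in total; both defaults are arbitrary.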

REST

Follow the API instructions to create a service by using the APIs Explorer.

Create a Dataproc cluster and connect to Dataproc Metastore

Next, you create a Dataproc cluster and connect to your metastore from the cluster. After that, your cluster uses the metastore service as its HMS. The cluster you create here uses the provided default settings.

Console

In the Google Cloud console, go to the Dataproc Clusters page.

In the navigation bar, select +Create cluster.

The Create a cluster dialog opens and lists the infrastructure options that you can choose from.

In the Cluster on Compute Engine row, select Create.

The Create a Dataproc cluster on Compute Engine page opens.

In the Cluster Name field, enter example-cluster.

In the Region menu, select us-central1. In the Zone menu, keep the default or select a zone in that region.

For the remaining Set up cluster options, use the provided defaults.

In the navigation menu, click the Customize cluster (optional) tab.

In the Dataproc Metastore section, select the metastore service you created earlier. If you followed this tutorial as-is, it's named example-service.

For the remaining cluster configuration options, use the provided defaults.

To create the cluster, click Create.

Your new cluster appears in the Clusters list. The cluster status displays Provisioning until the cluster is ready to use. When it's ready, the status changes to Active. Provisioning the cluster might take a couple of minutes.

gcloud CLI

To create a cluster using the provided default settings, run the following gcloud dataproc clusters create command:

gcloud dataproc clusters create example-cluster \
    --dataproc-metastore=projects/PROJECT_ID/locations/us-central1/services/example-service \
    --region=us-central1

Replace PROJECT_ID with the ID of the project that you created your Dataproc Metastore service in.
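To avoid typing PROJECT_ID by hand, the resource name can be assembled from the project in your active gcloud configuration. The following is a minimal sketch, assuming the cluster and the metastore service are in the same region, as in this tutorial; metastore_resource_name and create_cluster_with_metastore are hypothetical helpers.

```shell
# Hypothetical helper: prints the full resource name that --dataproc-metastore expects.
metastore_resource_name() {
  printf 'projects/%s/locations/%s/services/%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: creates a cluster attached to a metastore service in the
# same region, reading the project ID from the active gcloud configuration.
create_cluster_with_metastore() {
  local cluster="$1" region="$2" service="$3" project_id
  # Assumed to print nothing on stdout when no default project is configured.
  project_id="$(gcloud config get-value project 2>/dev/null)"
  if [ -z "$project_id" ]; then
    echo "Set a project first: gcloud config set project PROJECT_ID" >&2
    return 1
  fi
  gcloud dataproc clusters create "$cluster" \
    --dataproc-metastore="$(metastore_resource_name "$project_id" "$region" "$service")" \
    --region="$region"
}
```

For example, create_cluster_with_metastore example-cluster us-central1 example-service runs the same command as above with PROJECT_ID filled in.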

REST

Follow the API instructions to create a cluster by using the APIs Explorer.

Connect to Apache Hive with a Dataproc cluster

The next steps show you how to run some example commands in Apache Hive to create a database and a table.

Next, open an SSH session on the Dataproc cluster and launch a Hive session.

- In the Google Cloud console, go to the VM Instances page.

- In the list of virtual machine instances, click SSH next to example-cluster-m, the master node of example-cluster.

A browser window opens in your home directory on the node and shows output similar to the following:

Connected, host fingerprint: ssh-rsa ...
Linux cluster-1-m 3.16.0-0.bpo.4-amd64 ...
...
example-cluster@cluster-1-m:~$
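As an alternative to the VM Instances page, you can open the same session from a local terminal with gcloud compute ssh. The following is a minimal sketch, assuming a standard (single-master) cluster, whose master VM Dataproc names CLUSTER_NAME-m; high-availability clusters use different names. ssh_to_master is a hypothetical helper, and it looks up the cluster's zone because this tutorial lets Dataproc choose one.

```shell
# Hypothetical helper: opens an SSH session on the master node of a Dataproc cluster.
# Assumes a standard cluster whose master VM is named CLUSTER_NAME-m.
ssh_to_master() {
  local cluster="$1" region="$2" zone_uri zone
  zone_uri="$(gcloud dataproc clusters describe "$cluster" \
    --region="$region" --format='value(config.gceClusterConfig.zoneUri)')"
  # zoneUri may be a bare zone name or a full URL; keep only the last path segment.
  zone="${zone_uri##*/}"
  if [ -z "$zone" ]; then
    echo "Could not determine the zone of $cluster" >&2
    return 1
  fi
  gcloud compute ssh "${cluster}-m" --zone="$zone"
}
```

For example, ssh_to_master example-cluster us-central1 connects you to example-cluster-m, where you can continue with the Hive steps.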

To start Hive and create a database and table, run the following commands in the SSH session:

Start Hive.

hive

Create a database called myDatabase.

create database myDatabase;

Show the database you created.

show databases;

Use the database you created.

use myDatabase;

Create a table called myTable.

create table myTable(id int, name string);

List the tables under myDatabase.

show tables;

Describe the schema of the table you created.

desc myTable;

Running these commands produces output similar to the following:

$ hive
hive> show databases;
OK
default
hive> create database myDatabase;
OK
hive> use myDatabase;
OK
hive> create table myTable(id int,name string);
OK
hive> show tables;
OK
myTable
hive> desc myTable;
OK
id int
name string
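Because the cluster keeps its metadata in example-service, the same statements can also be submitted from your workstation as a Dataproc Hive job with gcloud dataproc jobs submit hive, without an SSH session. The following is a minimal sketch; run_tutorial_hive_job is a hypothetical helper, and IF NOT EXISTS is added (it is not in the steps above) so that the statements still succeed if you already created the objects interactively.

```shell
# Hypothetical helper: runs the tutorial's Hive statements as a Dataproc job
# instead of in an interactive Hive session on the master node.
run_tutorial_hive_job() {
  local cluster="$1" region="$2"
  # IF NOT EXISTS keeps the statements safe to rerun.
  local queries="create database if not exists myDatabase;
use myDatabase;
create table if not exists myTable(id int, name string);
show tables;
desc myTable;"
  gcloud dataproc jobs submit hive \
    --cluster="$cluster" \
    --region="$region" \
    --execute="$queries"
}
```

For example, run_tutorial_hive_job example-cluster us-central1 submits the job, and gcloud streams the job's output to your terminal.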

Clean up

To avoid incurring charges to your Google Cloud account for the resources used on this page, follow these steps.

- In the Google Cloud console, go to the Manage resources page.

- If the project that you plan to delete is attached to an organization, expand the Organization list in the Name column.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.
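The same shutdown can be started from the command line with gcloud projects delete, which asks for confirmation before it proceeds. The following is a minimal sketch; delete_tutorial_project is a hypothetical helper. Deleting a project removes every resource in it, so use it only with a project created for this tutorial.

```shell
# Hypothetical helper: shuts down (deletes) a project from the command line.
# gcloud prompts for confirmation because --quiet is not passed.
delete_tutorial_project() {
  local project_id="$1"
  if [ -z "$project_id" ]; then
    echo "usage: delete_tutorial_project PROJECT_ID" >&2
    return 1
  fi
  gcloud projects delete "$project_id"
}
```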

Alternatively, you can delete the resources used in this tutorial:

Delete the Dataproc Metastore service.

Console

In the Google Cloud console, open the Dataproc Metastore page.

In the service list, select example-service.

In the navigation bar, click Delete.

The Delete service dialog opens.

In the dialog, click Delete.

Your service no longer appears in the Service list.

gcloud CLI

To delete your service, run the following gcloud metastore services delete command:

gcloud metastore services delete example-service \
    --location=us-central1

REST

Follow the API instructions to delete a service by using the APIs Explorer.

All deletions succeed immediately.

Delete the Cloud Storage bucket for the Dataproc Metastore service.

Delete the Dataproc cluster that used the Dataproc Metastore service.
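The three deletions can also be scripted. The following is a minimal sketch, assuming that the service's artifactGcsUri field points into a bucket created for this service alone; cleanup_tutorial_resources is a hypothetical helper. It deletes the cluster first because the cluster uses the service, and it reads the bucket URI before the service is gone.

```shell
# Hypothetical helper: removes the tutorial resources without deleting the project.
# Assumes the artifacts bucket belongs to this metastore service only.
cleanup_tutorial_resources() {
  local cluster="$1" service="$2" region="$3" artifact_uri bucket
  # Read the artifacts location while the service still exists.
  artifact_uri="$(gcloud metastore services describe "$service" \
    --location="$region" --format='value(artifactGcsUri)')"
  gcloud dataproc clusters delete "$cluster" --region="$region" --quiet || return 1
  gcloud metastore services delete "$service" --location="$region" --quiet || return 1
  if [ -n "$artifact_uri" ]; then
    # Keep only gs://BUCKET, dropping any object path after the bucket name.
    bucket="${artifact_uri#gs://}"
    bucket="gs://${bucket%%/*}"
    # --recursive removes every object and then the bucket itself.
    gcloud storage rm --recursive "$bucket" --quiet
  else
    echo "No artifactGcsUri found; delete the bucket manually." >&2
  fi
}
```

For example, cleanup_tutorial_resources example-cluster example-service us-central1 removes everything this tutorial created while keeping the project.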