This page contains information and examples for connecting to a Cloud SQL instance from a service running in Cloud Run.

For step-by-step instructions on running a Cloud Run sample web application connected to Cloud SQL, see the quickstart for connecting from Cloud Run.

Cloud SQL is a fully managed database service that helps you set up, maintain, manage, and administer your relational databases in the cloud.

Cloud Run is a managed compute platform that lets you run containers directly on top of Google Cloud infrastructure.

Set up a Cloud SQL instance

- Enable the Cloud SQL Admin API in the Google Cloud project that you are connecting from, if you haven't already done so.

- Create a Cloud SQL for PostgreSQL instance. We recommend that you choose a Cloud SQL instance location in the same region as your Cloud Run service for better latency, to avoid some networking costs, and to reduce cross-region failure risks.

By default, Cloud SQL assigns a public IP address to a new instance. You also have the option to assign a private IP address. For more information about the connectivity options for both, see the Connecting Overview page.

Configure Cloud Run

The steps to configure Cloud Run depend on the type of IP address that you assigned to your Cloud SQL instance. If you route all egress traffic through Direct VPC egress or a Serverless VPC Access connector, use a private IP address. Compare the two network egress methods.

Public IP (default)

- Make sure that the instance has a public IP address. You can verify this on the Overview page for your instance in the Google Cloud console. If you need to add one, see the Configuring public IP page for instructions.

- Get the INSTANCE_CONNECTION_NAME for your instance. You can find this value on the Overview page for your instance in the Google Cloud console or by running the following gcloud sql instances describe command:

gcloud sql instances describe INSTANCE_NAME

- Get the CLOUD_RUN_SERVICE_ACCOUNT_NAME for your Cloud Run service. You can find this value on the IAM page of the project that's hosting the Cloud Run service in the Google Cloud console, or by running the following gcloud run services describe command in that project:

gcloud run services describe CLOUD_RUN_SERVICE_NAME --region CLOUD_RUN_SERVICE_REGION --format="value(spec.template.spec.serviceAccountName)"

Replace the following:

- CLOUD_RUN_SERVICE_NAME: the name of your Cloud Run service

- CLOUD_RUN_SERVICE_REGION: the region of your Cloud Run service

- Configure the service account for your Cloud Run service. To connect to Cloud SQL, make sure that the service account has the Cloud SQL Client IAM role (roles/cloudsql.client).

- If you're adding a Cloud SQL connection to a new service, your service must be containerized and uploaded to Container Registry or Artifact Registry. If you don't already have a container image, see these instructions about building and deploying a container image.

Like any configuration change, setting a new configuration for the Cloud SQL connection leads to the creation of a new Cloud Run revision. Subsequent revisions will also automatically get this Cloud SQL connection unless you make explicit updates to change it.
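The INSTANCE_CONNECTION_NAME you looked up earlier has the form PROJECT-ID:REGION:INSTANCE-ID. The following Python sketch is a hypothetical helper, not part of any Google library. It splits a connection name into its three parts so that a malformed value fails early. The split runs from the right because domain-scoped project IDs, such as example.com:my-project, contain a colon themselves.

```python
from typing import NamedTuple


class InstanceConnectionName(NamedTuple):
    """Hypothetical helper type for PROJECT-ID:REGION:INSTANCE-ID."""
    project: str
    region: str
    instance: str


def parse_connection_name(name: str) -> InstanceConnectionName:
    """Split an instance connection name, rejecting malformed values."""
    # rsplit keeps a domain-scoped project ID such as "example.com:my-project" intact.
    parts = name.rsplit(":", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected PROJECT-ID:REGION:INSTANCE-ID, got {name!r}")
    return InstanceConnectionName(*parts)


if __name__ == "__main__":
    # Example value is hypothetical.
    print(parse_connection_name("my-project:us-central1:my-instance"))
```

Validating the value at startup turns a typo in a deployment setting into an immediate, readable error. Without that check, the typo would surface later as a connection failure.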

Console

- Start configuring the service. To add Cloud SQL connections to an existing service, do the following:

- From the Services list, click the service name you want.

- Click Edit & deploy new revision.

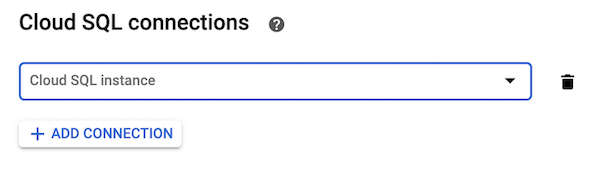

- Enable connecting to a Cloud SQL instance:

- Click Container(s) and then Settings.

- Scroll to Cloud SQL connections.

- Click Add connection.

- Click Enable the Cloud SQL Admin button if you haven't enabled the Cloud SQL Admin API yet.

- If you're adding a connection to a Cloud SQL instance in your project, then select the Cloud SQL instance you want from the menu.

- If you're using a Cloud SQL instance from another project, then select custom connection string in the menu and enter the full instance connection name in the format PROJECT-ID:REGION:INSTANCE-ID.

- To delete a connection, hold your cursor to the right of the connection to display the Delete icon, and click it.

- Click Create or Deploy.

Command line

Before using any of the following commands, make the following replacements:

- IMAGE with the image you're deploying

- SERVICE_NAME with the name of your Cloud Run service

- INSTANCE_CONNECTION_NAME with the instance connection name of your Cloud SQL instance, or a comma-delimited list of connection names.

If you're deploying a new container, use the following command:

gcloud run deploy SERVICE_NAME \
  --image=IMAGE \
  --add-cloudsql-instances=INSTANCE_CONNECTION_NAME

If you're updating an existing service, use the following command:

gcloud run services update SERVICE_NAME \
  --add-cloudsql-instances=INSTANCE_CONNECTION_NAME
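When you attach several instances, INSTANCE_CONNECTION_NAME is a single comma-delimited value. The Python sketch below assumes you drive gcloud from a deployment script, and the function name is hypothetical. It assembles the update command as an argument list, which you can print for review or pass to subprocess.run.

```python
import shlex
from typing import List, Sequence


def cloudsql_update_command(service_name: str, connection_names: Sequence[str]) -> List[str]:
    """Arguments for `gcloud run services update` that attach Cloud SQL instances."""
    if not connection_names:
        raise ValueError("at least one instance connection name is required")
    return [
        "gcloud", "run", "services", "update", service_name,
        # Several instances go into a single comma-delimited flag value.
        "--add-cloudsql-instances=" + ",".join(connection_names),
    ]


if __name__ == "__main__":
    cmd = cloudsql_update_command(
        "my-service",  # hypothetical service name
        ["my-project:us-central1:db-a", "my-project:us-central1:db-b"],  # hypothetical
    )
    print(shlex.join(cmd))  # review the command before running it
    # To execute it: import subprocess; subprocess.run(cmd, check=True)
```

Building an argument list rather than a shell string avoids quoting problems if a name ever contains unexpected characters.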

Terraform

The following code creates a base Cloud Run container, with a connected Cloud SQL instance.

- Apply the changes by entering terraform apply.

- Verify the changes by checking the Cloud Run service, clicking the Revisions tab, and then the Connections tab.

Private IP

If the authorizing service account belongs to a different project than the one containing the Cloud SQL instance, do the following:

- In both projects, enable the Cloud SQL Admin API.

- For the service account in the project that contains the Cloud SQL instance, add the IAM permissions.

- Make sure that the Cloud SQL instance created previously has a private IP address. To add an internal IP address, see Configure private IP.

- Configure your egress method to connect to the same VPC network as your Cloud SQL instance. Note the following conditions:

- Direct VPC egress and Serverless VPC Access both support communication to VPC networks connected using Cloud VPN and VPC Network Peering.

- Direct VPC egress and Serverless VPC Access don't support legacy networks.

- Unless you're using Shared VPC, a Serverless VPC Access connector must be in the same project and region as the resource that uses it, although the connector can send traffic to resources in different regions.

- Connect using your instance's private IP address and port 5432.

Connect to Cloud SQL

After you configure Cloud Run, you can connect to your Cloud SQL instance.

Public IP (default)

For public IP paths, Cloud Run provides encryption and connects using the Cloud SQL Auth Proxy in two ways:

- Through Unix sockets

- By using a Cloud SQL connector
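With the Unix socket path, Cloud Run exposes each configured instance as a socket directory at /cloudsql/INSTANCE_CONNECTION_NAME. PostgreSQL's socket file inside that directory is named .s.PGSQL.5432. The Python sketch below only builds strings, and its helper names are hypothetical. It assumes a libpq-based driver such as psycopg2, which treats a host value that starts with / as a socket directory.

```python
CLOUDSQL_SOCKET_ROOT = "/cloudsql"  # where Cloud Run mounts Cloud SQL sockets
POSTGRES_PORT = 5432


def socket_dir(instance_connection_name: str) -> str:
    """Directory that holds the PostgreSQL socket for one instance."""
    return f"{CLOUDSQL_SOCKET_ROOT}/{instance_connection_name}"


def socket_file(instance_connection_name: str) -> str:
    """Full socket path, for drivers (such as pg8000) that want the file itself."""
    return f"{socket_dir(instance_connection_name)}/.s.PGSQL.{POSTGRES_PORT}"


def _quote(value: str) -> str:
    # libpq keyword/value syntax: wrap in single quotes, backslash-escape ' and \.
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def libpq_socket_dsn(instance_connection_name: str, user: str, password: str, dbname: str) -> str:
    """Keyword/value DSN; libpq treats a host starting with '/' as a socket directory."""
    fields = {
        "host": socket_dir(instance_connection_name),
        "user": user,
        "password": password,
        "dbname": dbname,
    }
    return " ".join(f"{key}={_quote(val)}" for key, val in fields.items())
```

A libpq-based driver could then receive the resulting string, for example psycopg2.connect(dsn). No port is needed in the DSN, because the socket file name already encodes it.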

Use Secret Manager

Google recommends that you use Secret Manager to store sensitive information such as SQL credentials. With Cloud Run, you can pass secrets as environment variables or mount them as a volume.

After creating a secret in Secret Manager, update an existing service with the following command:

Command line

gcloud run services update SERVICE_NAME \
  --add-cloudsql-instances=INSTANCE_CONNECTION_NAME \
  --update-env-vars=INSTANCE_CONNECTION_NAME=INSTANCE_CONNECTION_NAME_SECRET \
  --update-secrets=DB_USER=DB_USER_SECRET:latest \
  --update-secrets=DB_PASS=DB_PASS_SECRET:latest \
  --update-secrets=DB_NAME=DB_NAME_SECRET:latest

Terraform

The following creates secret resources to securely hold the database user, password, and name values using google_secret_manager_secret and google_secret_manager_secret_version. Note that you must grant the project's Compute Engine default service account access to each secret, for example with the Secret Manager Secret Accessor role.

Update the main Cloud Run resource to include the new secrets.

Apply the changes by entering terraform apply.

The example command uses the latest secret version. However, Google recommends pinning each secret to a specific version, such as SECRET_NAME:v1.
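Inside the container, the secrets mapped with --update-secrets appear as ordinary environment variables. The following Python sketch assumes the variable names used in the command above, and load_db_settings is a hypothetical name. It reads the variables once at startup and fails with a clear message if any of them is missing.

```python
import os
from typing import Dict, Mapping, Optional

# Names match the --update-env-vars and --update-secrets flags in the command above.
REQUIRED_VARS = ("INSTANCE_CONNECTION_NAME", "DB_USER", "DB_PASS", "DB_NAME")


def load_db_settings(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the database settings, raising if any variable is unset or empty."""
    env = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        # Report only the variable names, never the secret values.
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}
```

Passing a plain dictionary instead of os.environ makes the function easy to exercise in local tests without setting real secrets.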

Private IP

For private IP paths, your application connects directly to your instance through a VPC network. This method uses TCP to connect directly to the Cloud SQL instance without using the Cloud SQL Auth Proxy.

Connect with TCP

Connect using the private IP address of your Cloud SQL instance as the host and port 5432.
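For example, a client library that accepts a PostgreSQL connection URI can be given the private IP address and port 5432 directly. The Python sketch below uses a hypothetical helper, tcp_database_url, to build such a URI. It percent-encodes the credentials, because characters like @, : or / in a password would otherwise break the URI.

```python
from urllib.parse import quote


def tcp_database_url(private_ip: str, user: str, password: str, dbname: str, port: int = 5432) -> str:
    """postgresql:// URI for a direct TCP connection over the VPC network."""
    def enc(value: str) -> str:
        # safe="" also encodes "/", so every reserved character in the value is escaped.
        return quote(value, safe="")

    return f"postgresql://{enc(user)}:{enc(password)}@{private_ip}:{port}/{enc(dbname)}"
```

libpq-based drivers such as psycopg2 accept this URI form. With SQLAlchemy, you would typically name the driver in the scheme instead, for example postgresql+pg8000://.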

Python

To see this snippet in the context of a web application, view the README on GitHub.

Java

To see this snippet in the context of a web application, view the README on GitHub.

Note:

- CLOUD_SQL_CONNECTION_NAME should be represented as <MY-PROJECT>:<INSTANCE-REGION>:<INSTANCE-NAME>

- Using the argument ipTypes=PRIVATE forces the SocketFactory to connect using the instance's associated private IP address.

- See the JDBC socket factory version requirements for the pom.xml file.

Node.js

To see this snippet in the context of a web application, view the README on GitHub.

Go

To see this snippet in the context of a web application, view the README on GitHub.

C#

To see this snippet in the context of a web application, view the README on GitHub.

Ruby

To see this snippet in the context of a web application, view the README on GitHub.

PHP

To see this snippet in the context of a web application, view the README on GitHub.

Best practices and other information

You can use the Cloud SQL Auth Proxy when testing your application locally. See the quickstart for using the Cloud SQL Auth Proxy for detailed instructions.

You can also test using the Cloud SQL Auth Proxy in a Docker container.
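A common pattern is to let the same code connect through the Unix socket on Cloud Run and through a locally running Cloud SQL Auth Proxy during testing. The Python sketch below shows this pattern under stated assumptions. The environment variable names INSTANCE_UNIX_SOCKET, INSTANCE_HOST, and DB_PORT are hypothetical. The fallback of 127.0.0.1:5432 assumes the proxy listens on its usual local address and the PostgreSQL default port.

```python
from typing import Mapping, NamedTuple, Optional


class ConnectionTarget(NamedTuple):
    host: str            # socket directory or TCP host
    port: Optional[int]  # None when connecting through a Unix socket


def resolve_target(env: Mapping[str, str]) -> ConnectionTarget:
    """Prefer a Unix socket (Cloud Run); otherwise use TCP (local Auth Proxy)."""
    socket_dir = env.get("INSTANCE_UNIX_SOCKET")  # hypothetical variable name
    if socket_dir:
        return ConnectionTarget(host=socket_dir, port=None)
    # Assumes the Cloud SQL Auth Proxy is listening locally on 127.0.0.1:5432.
    host = env.get("INSTANCE_HOST", "127.0.0.1")
    port = int(env.get("DB_PORT", "5432"))
    return ConnectionTarget(host=host, port=port)
```

In the service, you would call resolve_target(os.environ). On Cloud Run, INSTANCE_UNIX_SOCKET would be set to /cloudsql/INSTANCE_CONNECTION_NAME, and locally you would leave it unset.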

Connection Pools

Connections to underlying databases may be dropped, either by the database server itself or by the platform infrastructure. We recommend using a client library that supports connection pools that automatically reconnect broken client connections. For more detailed examples on how to use connection pools, see the Managing database connections page.

Connection Limits

Both the MySQL and PostgreSQL editions of Cloud SQL impose a maximum limit on concurrent connections, and these limits may vary depending on the database engine chosen (see the Cloud SQL Quotas and Limits page).

Each Cloud Run container instance is limited to 100 connections to a Cloud SQL database. Because every instance of a Cloud Run service or job can open up to 100 connections, the total number of connections per deployment grows as the service or job scales out.
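Because the total grows with the number of Cloud Run instances, it can help to size each instance's pool from the database's connection limit. The Python arithmetic below is a sketch with the following assumptions:

- You know the database's max_connections and the service's maximum instance count.
- Some connections may optionally be reserved for admin tools.
- The result is capped at the 100-connection per-instance limit described above.

```python
CLOUD_RUN_PER_INSTANCE_LIMIT = 100  # per-container-instance limit described above


def max_pool_size(db_max_connections: int, max_instances: int, reserved: int = 0) -> int:
    """Largest per-instance pool size that keeps the worst case under the database limit."""
    if max_instances < 1:
        raise ValueError("max_instances must be at least 1")
    per_instance = (db_max_connections - reserved) // max_instances
    if per_instance < 1:
        raise ValueError("not enough database connections for this many instances")
    return min(per_instance, CLOUD_RUN_PER_INSTANCE_LIMIT)


if __name__ == "__main__":
    # Hypothetical numbers: 500 allowed connections, 20 reserved, up to 10 instances.
    size = max_pool_size(500, 10, reserved=20)
    print(size, "connections per instance; worst case", size * 10)
```

With those hypothetical numbers, each instance gets (500 - 20) // 10 = 48 connections, for a worst case of 480.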

You can limit the maximum number of connections used per instance by using a connection pool. For more detailed examples on how to limit the number of connections, see the Managing database connections page.
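The following is a deliberately small, stdlib-only Python sketch of what such a pool does. It is not a substitute for the pooling built into libraries such as SQLAlchemy. The pool:

- Never lends out more than max_size connections at once.
- Reuses idle connections.
- Replaces connections that fail a health check.

The connect, is_alive, and close callables are assumptions that you would supply for your driver.

```python
import queue
import threading
from contextlib import contextmanager, suppress
from typing import Callable, Generic, Iterator, TypeVar

Conn = TypeVar("Conn")


class BoundedPool(Generic[Conn]):
    """Minimal pool: at most max_size connections lent out at once."""

    def __init__(
        self,
        connect: Callable[[], Conn],
        is_alive: Callable[[Conn], bool],
        close: Callable[[Conn], None],
        max_size: int,
        timeout: float = 30.0,
    ) -> None:
        self._connect = connect
        self._is_alive = is_alive
        self._close = close
        self._timeout = timeout
        self._idle: "queue.LifoQueue[Conn]" = queue.LifoQueue()
        self._slots = threading.BoundedSemaphore(max_size)

    def _checkout(self) -> Conn:
        # Reuse the most recently returned connection if it still works.
        while True:
            try:
                conn = self._idle.get_nowait()
            except queue.Empty:
                return self._connect()  # nothing idle: open a new connection
            if self._is_alive(conn):
                return conn
            with suppress(Exception):
                self._close(conn)  # discard a broken connection and try the next one

    @contextmanager
    def connection(self) -> Iterator[Conn]:
        if not self._slots.acquire(timeout=self._timeout):
            raise TimeoutError("no database connection available")
        try:
            conn = self._checkout()
            try:
                yield conn
            except BaseException:
                # Don't return a connection that was in use when an error occurred.
                with suppress(Exception):
                    self._close(conn)
                raise
            self._idle.put(conn)
        finally:
            self._slots.release()
```

Setting max_size from a per-instance budget keeps each Cloud Run instance within its share of the database's connection limit. The timeout makes callers fail fast instead of queuing indefinitely.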

API Quota Limits

Cloud Run provides a mechanism that connects using the Cloud SQL Auth Proxy, which uses the Cloud SQL Admin API, so API quota limits apply to the Cloud SQL Auth Proxy. The Cloud SQL Admin API quota used is approximately two times the number of configured Cloud SQL instances, multiplied by the number of Cloud Run instances of a particular service deployed at any one time. You can cap or increase the number of Cloud Run instances to modify the expected API quota consumed.

What's next

- Learn more about Cloud Run.

- Learn more about building and deploying container images.

- See a complete example in Python for using Cloud Run with PostgreSQL.