The Object detector model can identify and locate more than 500 types of objects in a video. The model accepts a video stream as input and outputs a protocol buffer with the detection results to BigQuery. The model runs at one FPS. When you create an app that uses the object detector model, you must direct model output to a BigQuery connector to view prediction output.

Object detector model app specifications

Use the following instructions to create a object detector model in the Google Cloud console.

Console

Create an app in the Google Cloud console

To create a object detector app, follow instructions in Build an application.

Add an object detector model

- When you add model nodes, select the Object detector from the list of pre-trained models.

Add a BigQuery connector

To use the output, connect the app to a BigQuery connector.

For information about using the BigQuery connector, see Connect and store data to BigQuery. For BigQuery pricing information, see the BigQuery pricing page.

View output results in BigQuery

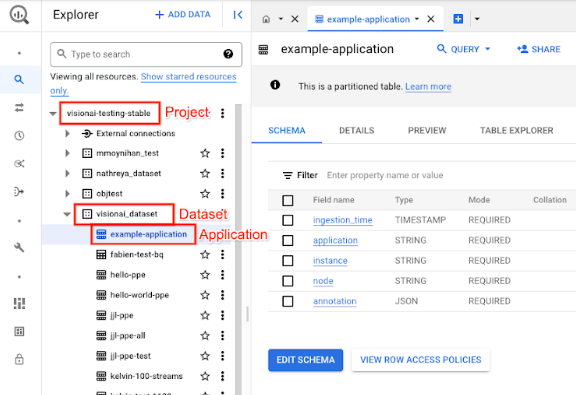

After the model outputs data to BigQuery, view output annotations in the BigQuery dashboard.

If you didn't specify a BigQuery path, you can view the system-created path in the Vertex AI Vision Studio page.

In the Google Cloud console, open the BigQuery page.

Select Expand next to the target project, dataset name, and application name.

In the table detail view, click Preview. View results in the annotation column. For a description of the output format, see model output.

The application stores results in chronological order. The oldest results are the beginning of the table, while the most recent results are added to the end of the table. To check the latest results, click the page number to go to the last table page.

Model output

The model outputs bounding boxes, their object labels, and confidence scores for each video frame. The output also contains a timestamp. The rate of the output stream is one frame per second.

In the protocol buffer output example that follows, note the following:

- Timestamp - The timestamp corresponds to the time for this inference result.

- Identified boxes - The main detection result that includes box identity, bounding box information, confidence score, and object prediction.

Sample annotation output JSON object

{

"currentTime": "2022-11-09T02:18:54.777154048Z",

"identifiedBoxes": [

{

"boxId":"0",

"normalizedBoundingBox": {

"xmin": 0.6963465,

"ymin": 0.23144785,

"width": 0.23944569,

"height": 0.3544306

},

"confidenceScore": 0.49874997,

"entity": {

"labelId": "0",

"labelString": "Houseplant"

}

}

]

}

Protocol buffer definition

// The prediction result protocol buffer for object detection

message ObjectDetectionPredictionResult {

// Current timestamp

protobuf.Timestamp timestamp = 1;

// The entity information for annotations from object detection prediction

// results

message Entity {

// Label id

int64 label_id = 1;

// The human-readable label string

string label_string = 2;

}

// The identified box contains the location and the entity of the object

message IdentifiedBox {

// An unique id for this box

int64 box_id = 1;

// Bounding Box in normalized coordinates [0,1]

message NormalizedBoundingBox {

// Min in x coordinate

float xmin = 1;

// Min in y coordinate

float ymin = 2;

// Width of the bounding box

float width = 3;

// Height of the bounding box

float height = 4;

}

// Bounding Box in the normalized coordinates

NormalizedBoundingBox normalized_bounding_box = 2;

// Confidence score associated with this bounding box

float confidence_score = 3;

// Entity of this box

Entity entity = 4;

}

// A list of identified boxes

repeated IdentifiedBox identified_boxes = 2;

}

Best practices and limitations

To get the best results when you use the object detector, consider the following when you source data and use the model.

Source data recommendations

Recommended: Make sure the objects in the picture are clear and are not covered or largely obscured by other objects.

Sample image data the object detector is able to process correctly:

|

Sending the model this image data returns the following object detection information*:

* The annotations in the following image are for illustrative purposes only. The bounding boxes, labels, and confidence scores are manually drawn and not added by the model or any Google Cloud console tool.

Not recommended: Avoid image data where the key object items are too small in the frame.

Sample image data the object detector isn't able to process correctly:

|

Not recommended: Avoid image data that show the key object items partially or fully covered by other objects.

Sample image data the object detector isn't able to process correctly:

|

Limitations

- Video resolution: The recommended maximum input video resolution is 1920 x 1080, and the recommended minimum resolution is 160 x 120.

- Lighting: The model performance is sensitive to lighting conditions. Extreme brightness or darkness might lead to lower detection quality.

- Object size: The object detector has a minimal detectable object size. Make sure the target objects are sufficiently large and visible in your video data.