Objectives

This tutorial shows you how to install the Dataproc Jupyter component on a new cluster, and then connect to the Jupyter notebook UI running on the cluster from your local browser using the Dataproc Component Gateway.

Costs

In this document, you use the following billable components of Google Cloud:

- Dataproc
- Compute Engine
- Cloud Storage

To generate a cost estimate based on your projected usage, use the pricing calculator.

Before you begin

If you haven't already done so, create a Google Cloud project and a Cloud Storage bucket.

Setting up your project

- Sign in to your Google Cloud account. If you're new to Google Cloud, create an account to evaluate how our products perform in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

- In the Google Cloud console, on the project selector page, select or create a Google Cloud project.

- Make sure that billing is enabled for your Google Cloud project.

- Enable the Dataproc, Compute Engine, and Cloud Storage APIs.

- Install the Google Cloud CLI.

- To initialize the gcloud CLI, run the following command:

gcloud init
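The cleanup commands later in this tutorial use the shell variables ${REGION} and ${BUCKET_NAME}. As a minimal sketch, assuming a Bash shell, you can define those variables once after running gcloud init and, if you prefer the command line to the console, enable the three APIs with gcloud services enable. The values us-central1 and my-notebooks-bucket and the helper name enable_tutorial_apis are placeholders chosen for illustration, not values from this tutorial.

```shell
#!/usr/bin/env bash
# Placeholder values: replace them with your own region and bucket name.
export REGION="us-central1"
export BUCKET_NAME="my-notebooks-bucket"

# Hypothetical helper: enables the Dataproc, Compute Engine, and
# Cloud Storage APIs for the given project ID.
enable_tutorial_apis() {
    local project_id="$1"
    gcloud services enable \
        dataproc.googleapis.com \
        compute.googleapis.com \
        storage.googleapis.com \
        --project="${project_id}"
}
```

For example, run enable_tutorial_apis project-id, replacing project-id with your own project ID.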

Creating a Cloud Storage bucket

Create a Cloud Storage bucket in your project to store any notebooks you create in this tutorial.

- In the Google Cloud console, go to the Cloud Storage Buckets page.

- Click Create bucket.

- On the Create a bucket page, enter your bucket information. To go to the next step, click Continue.

  - For Name your bucket, enter a name that meets the bucket naming requirements.

  - For Choose where to store your data, do the following:

    - Select a Location type option.

    - Select a Location option.

  - For Choose a default storage class for your data, select a storage class.

  - For Choose how to control access to objects, select an Access control option.

  - For Advanced settings (optional), specify an encryption method, a retention policy, or bucket labels.

- Click Create. Your notebooks will be stored in Cloud Storage under gs://bucket-name/notebooks/jupyter.
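The console steps above can also be done from the command line with gcloud storage buckets create. The sketch below, assuming a Bash shell, adds a deliberately simplified name check that covers only some of the naming requirements (3 to 63 characters; lowercase letters, digits, dashes, underscores, and dots; starting and ending with a letter or digit). It does not enforce every rule, such as the longer limit for dotted names or the restrictions on names containing "google". The helper names is_plausible_bucket_name and create_notebook_bucket are hypothetical.

```shell
#!/usr/bin/env bash
# Simplified, partial check of the Cloud Storage bucket naming rules.
# Returns 0 if the name passes these checks, 1 otherwise.
is_plausible_bucket_name() {
    local name="$1"
    # Length between 3 and 63 characters.
    [[ ${#name} -ge 3 && ${#name} -le 63 ]] || return 1
    # Allowed characters, starting and ending with a letter or digit.
    [[ "$name" =~ ^[a-z0-9][a-z0-9._-]*[a-z0-9]$ ]]
}

# Hypothetical helper: creates the bucket in the given location
# after the simplified name check passes.
create_notebook_bucket() {
    local bucket_name="$1" location="$2"
    if ! is_plausible_bucket_name "$bucket_name"; then
        echo "invalid bucket name: $bucket_name" >&2
        return 1
    fi
    gcloud storage buckets create "gs://${bucket_name}" --location="${location}"
}
```

For example: create_notebook_bucket "${BUCKET_NAME}" "${REGION}". Flags for storage class and access control exist as well; they are left out here to keep the sketch short.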

Create a cluster and install the Jupyter component

Create a cluster with the Jupyter component installed and Component Gateway enabled, so that you can open the notebook UIs from the Google Cloud console.
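A minimal command-line sketch, assuming a Bash shell and default image settings: --optional-components=JUPYTER installs the Jupyter component, --enable-component-gateway turns on the Component Gateway links, and --bucket makes your bucket the cluster's staging bucket, which is where the Jupyter component saves notebooks by default. The helper name create_jupyter_cluster is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: creates a Dataproc cluster with the Jupyter
# component and Component Gateway, using the given bucket for staging.
create_jupyter_cluster() {
    local cluster_name="$1" region="$2" bucket_name="$3"
    gcloud dataproc clusters create "${cluster_name}" \
        --region="${region}" \
        --optional-components=JUPYTER \
        --enable-component-gateway \
        --bucket="${bucket_name}"
}
```

For example: create_jupyter_cluster cluster-name "${REGION}" "${BUCKET_NAME}".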

Open the Jupyter and JupyterLab UIs

In the Google Cloud console, open your cluster's Web Interfaces tab, then click the Component Gateway links to open the Jupyter notebook or JupyterLab UI running on your cluster's master node.

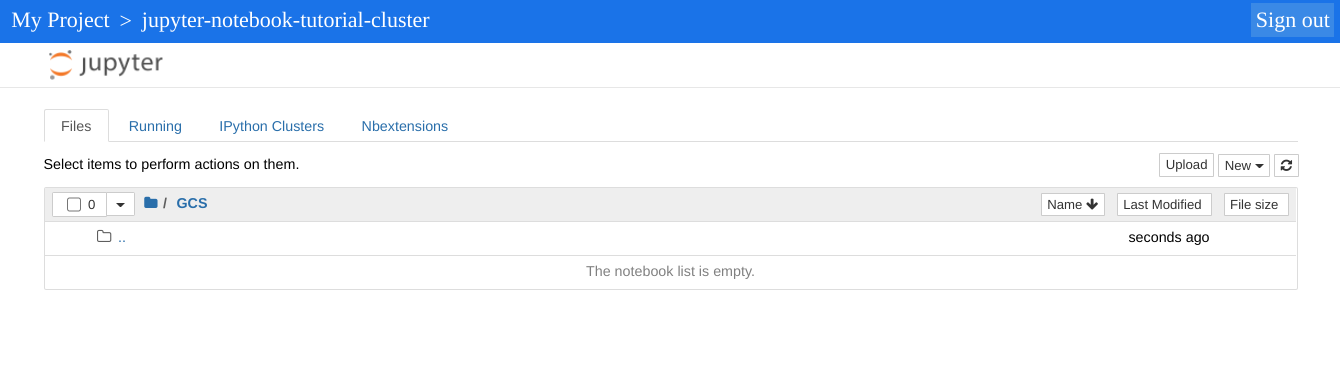
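If you prefer the command line, the Component Gateway URLs are recorded in the cluster's endpoint configuration. This sketch, assuming a Bash shell, prints that part of the cluster description with gcloud dataproc clusters describe; the helper name show_component_gateway_urls is hypothetical, and the exact layout of the printed URLs may vary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the Component Gateway URLs stored in the
# cluster's endpoint configuration (config.endpointConfig.httpPorts).
show_component_gateway_urls() {
    local cluster_name="$1" region="$2"
    gcloud dataproc clusters describe "${cluster_name}" \
        --region="${region}" \
        --format="yaml(config.endpointConfig.httpPorts)"
}
```

For example: show_component_gateway_urls cluster-name "${REGION}".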

The top-level directory displayed by your Jupyter instance is a virtual directory that lets you browse either your Cloud Storage bucket or the local file system of your cluster's master node. Click GCS to browse Cloud Storage, or Local Disk to browse the master node's local file system.

- Click the GCS link. The Jupyter notebook web UI displays notebooks stored in your Cloud Storage bucket, including any notebooks you create in this tutorial.
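To confirm from the command line that your notebooks were saved, you can list the gs://bucket-name/notebooks/jupyter location mentioned earlier. A minimal sketch, assuming a Bash shell; the helper name list_notebooks is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: lists the notebooks saved under
# gs://BUCKET/notebooks/jupyter/ in the given bucket.
list_notebooks() {
    local bucket_name="$1"
    gcloud storage ls "gs://${bucket_name}/notebooks/jupyter/"
}
```

For example: list_notebooks "${BUCKET_NAME}".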

Clean up

After you finish the tutorial, you can clean up the resources that you created so that they stop using quota and incurring charges. The following sections describe how to delete or turn off these resources.

Deleting the project

The easiest way to eliminate billing is to delete the project that you created for the tutorial.

To delete the project:

- In the Google Cloud console, go to the Manage resources page.

- In the project list, select the project that you want to delete, and then click Delete.

- In the dialog, type the project ID, and then click Shut down to delete the project.
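The same shutdown can be requested with gcloud projects delete, which asks for confirmation before it proceeds. A minimal sketch, assuming a Bash shell; the helper name delete_tutorial_project is hypothetical. Deleting a project removes every resource in it, so use this only on a project created for this tutorial.

```shell
#!/usr/bin/env bash
# Hypothetical helper: requests deletion of the given project.
# gcloud prompts for confirmation before shutting the project down.
delete_tutorial_project() {
    local project_id="$1"
    gcloud projects delete "${project_id}"
}
```

For example: delete_tutorial_project project-id.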

Deleting the cluster

- To delete your cluster:

gcloud dataproc clusters delete cluster-name \
    --region=${REGION}

Deleting the bucket

- To delete the Cloud Storage bucket you created in Before you begin, step 2, including the notebooks stored in the bucket:

gcloud storage rm gs://${BUCKET_NAME} --recursive