Google Play transfers

The BigQuery Data Transfer Service for Google Play connector lets you automatically schedule and manage recurring load jobs for Google Play reporting data.

Supported Reports

The BigQuery Data Transfer Service for Google Play supports the following monthly reporting options:

Detailed reports

Aggregated reports

For information on how Google Play reports are transformed into BigQuery tables, see Google Play report transformations.

| Reporting option | Support |
|---|---|
| Supported API version | N/A |
| Repeat frequency | Daily, at the time the data transfer is first created (default). You can configure the time of day. |
| Refresh window | Last 7 days. Not configurable. |
| Maximum backfill duration | No limit. While Google Play has no known data retention limits, the BigQuery Data Transfer Service has limits on how many days can be requested in a single backfill. For information on backfills, see Manually trigger a transfer. |

Data ingestion from Google Play transfers

When you transfer data from Google Play into BigQuery, the data is loaded into BigQuery tables that are partitioned by date. The table partition that the data is loaded into corresponds to the date from the data source. If you schedule multiple transfers for the same date, BigQuery Data Transfer Service overwrites the partition for that specific date with the latest data. Multiple transfers in the same day or running backfills don't result in duplicate data, and partitions for other dates are not affected.

Refresh windows

A refresh window is the number of days of data that a data transfer retrieves each time it runs. For example, if the refresh window is three days and a daily transfer occurs, the BigQuery Data Transfer Service retrieves all data from your source table from the past three days. In this example, when a daily transfer occurs, the BigQuery Data Transfer Service creates a new BigQuery destination table partition with a copy of your source table data from the current day, then automatically triggers backfill runs to update the BigQuery destination table partitions with your source table data from the past two days. The automatically triggered backfill runs either overwrite or incrementally update your BigQuery destination table, depending on whether the BigQuery Data Transfer Service connector supports incremental updates.

When you run a data transfer for the first time, the data transfer retrieves all source data available within the refresh window. For example, if the refresh window is three days and you run the data transfer for the first time, the BigQuery Data Transfer Service retrieves all source data from the past three days.

Refresh windows are mapped to the TransferConfig.data_refresh_window_days API field.

To retrieve data outside the refresh window, such as historical data, or to recover data from any transfer outages or gaps, you can initiate or schedule a backfill run.
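The refresh window arithmetic described in this section can be sketched in a few lines of Python. This is only an illustration, not part of the BigQuery Data Transfer Service: the function name refresh_window_dates is hypothetical, and the sketch assumes that the run date counts as the first day of the window, as in the three-day example.

```python
from datetime import date, timedelta


def refresh_window_dates(run_date: date, window_days: int) -> list[date]:
    """Return the source dates covered by one transfer run, newest first.

    Assumption: the run date itself is day one of the window, and the
    remaining days are the automatically triggered backfill runs.
    """
    if window_days < 1:
        raise ValueError("window_days must be at least 1")
    return [run_date - timedelta(days=offset) for offset in range(window_days)]


# Three-day window: the run date plus two backfilled days.
for covered in refresh_window_dates(date(2024, 5, 10), 3):
    print(covered.isoformat())
```

For Google Play, the refresh window is fixed at 7 days, so the same call with window_days=7 would list the seven partition dates that a daily run touches under these assumptions.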

Limitations

- The shortest interval at which you can schedule a data transfer is once every 24 hours. By default, a transfer starts at the time that you create the transfer. However, you can configure the transfer start time when you set up your transfer.

- The BigQuery Data Transfer Service does not support incremental data transfers during a Google Play transfer. When you specify a date for a data transfer, all of the data that is available for that date is transferred.
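Because transfers are not incremental, each run for a given date replaces that date's partition in full. The following Python sketch models this with a plain dictionary keyed by date. It is a simplified mental model only; the function load_partition and the sample row values are hypothetical and do not correspond to any BigQuery API.

```python
from datetime import date


def load_partition(table: dict[date, list[str]], day: date, rows: list[str]) -> None:
    """Replace the whole partition for `day`; other partitions are untouched."""
    table[day] = list(rows)


table: dict[date, list[str]] = {}
load_partition(table, date(2024, 5, 9), ["review-a"])
load_partition(table, date(2024, 5, 10), ["review-b"])
# A second run for the same date replaces the partition instead of appending.
load_partition(table, date(2024, 5, 10), ["review-b", "review-c"])
print(table[date(2024, 5, 10)])
```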

Before you begin

Before you create a Google Play data transfer:

- Verify that you have completed all actions required to enable the BigQuery Data Transfer Service.

- Create a BigQuery dataset to store the Google Play data.

- Find your Cloud Storage bucket:

- In the Google Play console, click Download reports and select Reviews, Statistics, or Financial.

- To copy the ID for your Cloud Storage bucket, click Copy Cloud Storage URI. Your bucket ID begins with gs://. For example, for the reviews report, your ID is similar to the following: gs://pubsite_prod_rev_01234567890987654321/reviews

- For the Google Play data transfer, you need to copy only the unique ID that comes between gs:// and /reviews: pubsite_prod_rev_01234567890987654321

- If you intend to set up transfer run notifications for Pub/Sub, you must have pubsub.topics.setIamPolicy permissions. Pub/Sub permissions are not required if you only set up email notifications. For more information, see BigQuery Data Transfer Service run notifications.
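If you copy the full Cloud Storage URI from the Google Play console, the bucket ID that the transfer needs is the part between gs:// and the next slash. A minimal Python sketch of that extraction follows; the helper name bucket_id_from_uri is hypothetical.

```python
def bucket_id_from_uri(uri: str) -> str:
    """Return the bucket ID from a Cloud Storage URI such as gs://bucket/path."""
    prefix = "gs://"
    if not uri.startswith(prefix):
        raise ValueError(f"expected a URI starting with {prefix!r}: {uri!r}")
    # Keep only the text between the prefix and the first slash, if any.
    bucket = uri[len(prefix):].split("/", 1)[0]
    if not bucket:
        raise ValueError(f"no bucket ID found in {uri!r}")
    return bucket


print(bucket_id_from_uri("gs://pubsite_prod_rev_01234567890987654321/reviews"))
```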

Required permissions

BigQuery: Ensure that the person creating the data transfer has the following permissions in BigQuery:

- bigquery.transfers.update permissions to create the data transfer

- Both bigquery.datasets.get and bigquery.datasets.update permissions on the target dataset

The bigquery.admin predefined IAM role includes bigquery.transfers.update, bigquery.datasets.update, and bigquery.datasets.get permissions. For more information on IAM roles in BigQuery Data Transfer Service, see Access control.

Google Play: Ensure that you have the following permissions in Google Play:

- You must have reporting access in the Google Play console.

The Google Cloud team does NOT have the ability to generate or grant access to Google Play files on your behalf. See Contact Google Play support for help accessing Google Play files.

Set up a Google Play transfer

Setting up a Google Play data transfer requires a:

- Cloud Storage bucket. Steps for locating your Cloud Storage bucket are

described in Before you begin.

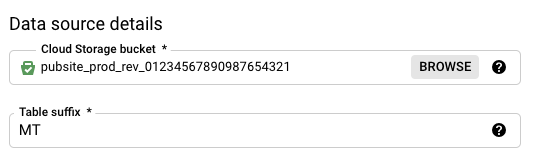

Your Cloud Storage bucket begins with

pubsite_prod_rev. For example:pubsite_prod_rev_01234567890987654321. - Table suffix: A user-friendly name for all data sources loading into the same dataset. The suffix is used to prevent separate transfers from writing to the same tables. The table suffix must be unique across all transfers that load data into the same dataset, and the suffix should be short to minimize the length of the resulting table name.

To set up a Google Play data transfer:

Console

Go to the Data transfers page in the Google Cloud console.

Click Create transfer.

On the Create Transfer page:

In the Source type section, for Source, choose Google Play.

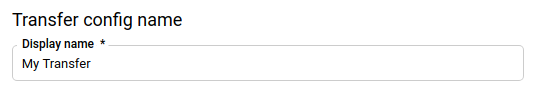

In the Transfer config name section, for Display name, enter a name for the data transfer such as My Transfer. The transfer name can be any value that lets you identify the transfer if you need to modify it later.

In the Schedule options section:

- For Repeat frequency, choose an option for how often to run the data transfer. If you select Days, provide a valid time in UTC.

- If applicable, select either Start now or Start at set time, and provide a start date and run time.

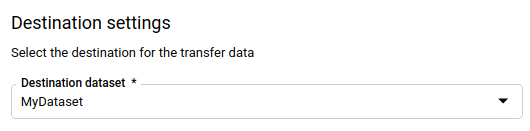

In the Destination settings section, for Destination dataset, choose the dataset that you created to store your data.

In the Data source details section:

- For Cloud Storage bucket, enter the ID for your Cloud Storage bucket.

- For Table suffix, enter a suffix such as MT (for My Transfer).

In the Service Account menu, select a service account from the service accounts that are associated with your Google Cloud project. You can associate a service account with your data transfer instead of using your user credentials. For more information about using service accounts with data transfers, see Use service accounts.

- If you signed in with a federated identity, then a service account is required to create a data transfer. If you signed in with a Google Account, then a service account for the transfer is optional.

- The service account must have the required permissions.

(Optional) In the Notification options section:

- Click the toggle to enable email notifications. When you enable this option, the transfer administrator receives an email notification when a transfer run fails.

- For Select a Pub/Sub topic, choose your topic name or click Create a topic. This option configures Pub/Sub run notifications for your transfer.

Click Save.

bq

Enter the bq mk command and supply the transfer creation flag, --transfer_config. The following flags are also required:

- --target_dataset

- --display_name

- --params

- --data_source

bq mk \
  --transfer_config \
  --project_id=project_id \
  --target_dataset=dataset \
  --display_name=name \
  --params='parameters' \
  --data_source=data_source \
  --service_account_name=service_account_name

Where:

- project_id is your project ID. If --project_id isn't specified, the default project is used.

- dataset is the target dataset for the transfer configuration.

- name is the display name for the transfer configuration. The data transfer name can be any value that lets you identify the transfer if you need to modify it later.

- parameters contains the parameters for the created transfer configuration in JSON format. For example: --params='{"param":"param_value"}'. For Google Play, you must supply the bucket and table_suffix parameters. bucket is the Cloud Storage bucket that contains your Play report files.

- data_source is the data source: play.

- service_account_name is the service account name used to authenticate your data transfer. The service account should be owned by the same project_id used to create the transfer and it should have all of the required permissions.

For example, the following command creates a Google Play data transfer named My Transfer using Cloud Storage bucket pubsite_prod_rev_01234567890987654321 and target dataset mydataset. The data transfer is created in the default project:

bq mk \
  --transfer_config \
  --target_dataset=mydataset \
  --display_name='My Transfer' \
  --params='{"bucket":"pubsite_prod_rev_01234567890987654321","table_suffix":"MT"}' \
  --data_source=play

The first time you run the command, you will receive a message like the following:

[URL omitted] Please copy and paste the above URL into your web browser and follow the instructions to retrieve an authentication code.

Follow the instructions in the message and paste the authentication code on the command line.
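Hand-writing the JSON for --params is easy to get wrong, so it can help to build the argument list programmatically. The following Python sketch assembles the same flags as the example command and prints a shell-quoted command line. It only constructs the command and does not run bq; the helper name build_bq_mk_args is hypothetical.

```python
import json
import shlex


def build_bq_mk_args(dataset: str, display_name: str, bucket: str, table_suffix: str) -> list[str]:
    """Build the argument list for a Google Play transfer in the default project."""
    # Compact separators keep the JSON free of spaces, matching the example command.
    params = json.dumps(
        {"bucket": bucket, "table_suffix": table_suffix},
        separators=(",", ":"),
    )
    return [
        "bq", "mk",
        "--transfer_config",
        f"--target_dataset={dataset}",
        f"--display_name={display_name}",
        f"--params={params}",
        "--data_source=play",
    ]


args = build_bq_mk_args(
    "mydataset", "My Transfer", "pubsite_prod_rev_01234567890987654321", "MT"
)
# shlex.join quotes any argument that contains spaces or shell metacharacters.
print(shlex.join(args))
```

Passing the list form directly to a process launcher such as subprocess.run would avoid shell quoting altogether; the printed form is meant for copying into a terminal.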

API

Use the projects.locations.transferConfigs.create method and supply an instance of the TransferConfig resource.
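As a rough sketch of what a TransferConfig for Google Play might contain, the following Python builds the parent path and JSON request body without sending anything. The field names follow the REST representation of TransferConfig as an assumption to verify against the API reference. The project ID my-project and location us are placeholders, and the helper name transfer_config_request is hypothetical.

```python
import json


def transfer_config_request(project_id: str, location: str, dataset: str,
                            display_name: str, bucket: str, table_suffix: str):
    """Return the (parent, body) pair for a transferConfigs.create call."""
    parent = f"projects/{project_id}/locations/{location}"
    body = {
        "destinationDatasetId": dataset,
        "displayName": display_name,
        "dataSourceId": "play",
        # The same two parameters that the bq tool takes through --params.
        "params": {"bucket": bucket, "table_suffix": table_suffix},
    }
    return parent, body


# "my-project" and "us" are placeholder values for illustration only.
parent, body = transfer_config_request(
    "my-project", "us", "mydataset", "My Transfer",
    "pubsite_prod_rev_01234567890987654321", "MT",
)
print(parent)
print(json.dumps(body, indent=2))
```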

Java

Before trying this sample, follow the Java setup instructions in the BigQuery quickstart using client libraries. For more information, see the BigQuery Java API reference documentation.

To authenticate to BigQuery, set up Application Default Credentials. For more information, see Set up authentication for client libraries.

Troubleshoot Google Play transfer setup

If you are having issues setting up your data transfer, see Troubleshooting BigQuery Data Transfer Service transfer setup.

Query your data

When your data is transferred to BigQuery, the data is written to ingestion-time partitioned tables. For more information, see Introduction to partitioned tables.

If you query your tables directly instead of using the auto-generated views, you must use the _PARTITIONTIME pseudocolumn in your query. For more information, see Querying partitioned tables.
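To illustrate a direct query, the following Python sketch builds a SQL string that filters on the _PARTITIONTIME pseudocolumn over a half-open date range. The table name my-project.mydataset.my_play_table is a placeholder and not a real Google Play table name, and the helper partition_filter_query is hypothetical. The sketch only produces the query text and does not call BigQuery.

```python
from datetime import date


def partition_filter_query(table: str, start: date, end: date) -> str:
    """Build a query over partitions from `start` (inclusive) to `end` (exclusive)."""
    return (
        f"SELECT * FROM `{table}` "
        f"WHERE _PARTITIONTIME >= TIMESTAMP('{start.isoformat()}') "
        f"AND _PARTITIONTIME < TIMESTAMP('{end.isoformat()}')"
    )


# Placeholder table name; substitute a table from your own dataset.
query = partition_filter_query(
    "my-project.mydataset.my_play_table", date(2024, 5, 1), date(2024, 5, 8)
)
print(query)
```

Filtering on _PARTITIONTIME also limits the partitions that the query scans, which can reduce the bytes billed.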

Pricing

For information on Google Play data transfer pricing, see the Pricing page.

Once data is transferred to BigQuery, standard BigQuery storage and query pricing applies.

What's next

- To see how your Google Play reports are transferred to BigQuery, see Google Play report transformations.

- For an overview of BigQuery Data Transfer Service, see Introduction to BigQuery Data Transfer Service.

- For information on using transfers including getting information about a transfer configuration, listing transfer configurations, and viewing a transfer's run history, see Working with transfers.